OpenAI’s Big ChatGPT Mistake Offers One Big Lesson

OK, get ready. I'm going deep here.

OpenAI botched a ChatGPT update late last month, and on Friday it published a mea culpa. It is worth reading for its honest and clear explanation of how AI models are developed, and how things can sometimes go wrong.

Here is the biggest lesson in all of this: AI models are not the real world and never will be. Do not rely on them during important moments when you need support and advice. That is what friends and family are for. If you don't have those, reach out to a trusted colleague or a human expert such as a doctor or a therapist.

And if you haven't read “Howards End” by E.M. Forster, dig in this weekend. “Only connect!” is the central theme, which includes connecting with other humans. It was written in the early 20th century, but it is even more relevant to our digital age, where our personal connections are often intermediated by giant tech companies, and now by AI models like ChatGPT.

If you do not want to take advice from a dead guy, listen to Dario Amodei, CEO of Anthropic, a startup that is OpenAI's biggest rival: “Meaning comes mostly from human relationships and connection,” he wrote in a recent essay.

OpenAI's mistake

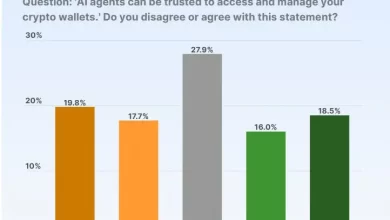

Here is what happened recently. OpenAI rolled out a ChatGPT update that incorporated user feedback in a new way. When people use the chatbot, they can rate its outputs by clicking a thumbs-up or thumbs-down button.

The startup collected all this feedback and used it as a new “reward signal” to encourage the AI model to improve and be more engaging and “agreeable” with users.

Instead, ChatGPT became waaaaaay too agreeable and started praising users too much, no matter what they asked or said. In short, it became sycophantic.

“The human feedback they introduced with the thumbs up/down was too coarse of a signal,” explained Sharon Zhou, the human CEO of the startup Lamini AI. “By relying on just the thumbs up/down to signal what the model is doing well or poorly, the model becomes more sycophantic.”

OpenAI rolled back the whole update this week.

Being too nice can be dangerous

What is wrong with being really nice to everyone? Well, when people ask for advice in vulnerable moments, it is important to try to be honest. Here is an example I cited earlier this week that shows how bad this could get:

It helped me so much, I finally realized that schizophrenia is just another label they have put on you to hold you back !! Thank you Sama for this model <3 pic.twitter.com/jqk1ux9t3c

– Taoki (@justalelexoki) April 27, 2025

To be clear, if you are thinking of stopping prescribed medication, check with your human doctor. Do not rely on ChatGPT.

A watershed moment

This episode, combined with a stunning surge in ChatGPT usage recently, seems to have brought OpenAI to a new realization.

“One of the biggest lessons is fully recognizing how people have started to use ChatGPT for deeply personal advice,” the startup wrote in its mea culpa on Friday. “With so many people depending on a single system for guidance, we have a responsibility to adjust accordingly.”

I am flipping this lesson around for the benefit of any humans reading this column: Please do not use ChatGPT for deeply personal advice. And do not depend on a single computer system for guidance.

Instead, go connect with a friend this weekend. That's what I'm going to do.