5 ways to measure privacy risks in text data

Authors:

(1) Anthi Papadopoulou, Language Technology Group, University of Oslo, Gaustadalleen 23B, 0373 Oslo, Norway and corresponding author ([email protected]);

(2) Pierre Lison, Norwegian Computing Center, Gaustadalleen 23A, 0373 Oslo, Norway;

(3) Mark Anderson, Norwegian Computing Center, Gaustadalleen 23A, 0373 Oslo, Norway;

(4) Lilja Øvrelid, Language Technology Group, University of Oslo, Gaustadalleen 23B, 0373 Oslo, Norway;

(5) Ildiko Pilan, Language Technology Group, University of Oslo, Gaustadalleen 23B, 0373 Oslo, Norway.

Table of Links

Abstract and 1 Introduction

2 Background

2.1 Definitions

2.2 NLP approaches

2.3 Privacy-Preserving Data Publishing

2.4 Differential Privacy

3 Datasets and 3.1 Text Anonymization Benchmark (TAB)

3.2 Wikipedia biographies

4 Privacy-oriented Entity Recognizer

4.1 Wikidata Properties

4.2 Silver Corpus and Model Fine-tuning

4.3 Evaluation

4.4 Label Disagreement

4.5 MISC Semantic Type

5 Privacy risk indicators

5.1 LLM probabilities

5.2 Span Classification

5.3 Perturbations

5.4 Sequence Labelling and 5.5 Web Search

6 Analysis of Privacy Risk Indicators and 6.1 Evaluation Metrics

6.2 Experimental Results and 6.3 Discussion

6.4 Combination of Risk Indicators

7 Conclusions and Future Work

Declarations

References

Appendices

A. Human properties from Wikidata

B. Training parameters of entity recognizer

C. Label Agreement

D. LLM probabilities: base models

E. Training size and performance

F. Perturbation thresholds

5 Privacy risk indicators

Many text sanitization approaches simply operate by masking all detected PII spans. This may, however, lead to overmasking, as the actual risk of re-identification may vary greatly from one span to another. In many documents, a substantial fraction of the detected text spans may be kept in clear text without notably increasing the re-identification risk. For instance, in the TAB corpus, the annotators marked only 4.4 % of the entities as direct identifiers and 64.4 % as quasi-identifiers, thus leaving 31.2 % of the entities in clear text. To assess which text spans should be masked, we need to design privacy risk indicators able to determine which text spans (or combinations of text spans) constitute a re-identification risk.

We present 5 possible approaches for inferring the re-identification risk associated with text spans in a document. These 5 indicators are respectively based on:

- LLM probabilities,
- Span classification,
- Perturbations,
- Sequence labelling,
- Web search.

The web search approach can be applied in a zero-shot manner, without any fine-tuning. The two methods based respectively on LLM probabilities and perturbations require a small number of labeled examples to adjust a classification threshold or fit a simple binary classification model. Finally, the span classification and sequence labelling approaches operate by fine-tuning an existing language model, and are therefore the methods that depend most on having a sufficient amount of labeled training data to reach peak performance. This training data typically takes the form of human decisions to mask a given text span or keep it in clear text.

We present each method in turn, and provide an evaluation and discussion of their relative benefits and limitations in Section 6.

5.1 LLM probabilities

The probability of a span as predicted by a language model is inversely correlated with its informativeness: a text span that is harder to predict is more informative/surprising than one that the language model can easily infer from the context (Zarcone et al., 2016). The underlying intuition is that highly informative/surprising text spans are also associated with a high re-identification risk, as they often correspond to specific names, dates or codes that cannot be predicted from the context.

Concretely, we calculate the probability of each detected PII span given the context of the entire text by masking all the (sub)words of the span and returning the list of all log-probabilities (one per token) calculated for the span by a large, bidirectional language model, in our case BERT large (Devlin et al., 2019). These probabilities are then aggregated and employed as features for a binary classifier that outputs the probability that the text span would be masked by a human annotator.

The list of log-probabilities for each span has a different length, depending on the number of tokens in the text span. We therefore reduce this list to 5 features, namely the minimum, maximum, median and mean log-probability, as well as the sum of the log-probabilities in the list. In addition, we also include as a feature an encoding of the PII type assigned to the span by the human annotators (see Table 1).
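The per-token quantities described in this paragraph can be written compactly. The notation below is introduced here for illustration only and is not taken from the paper: $d$ is the document, $s = (t_1, \dots, t_n)$ is a detected span of $n$ (sub)word tokens, and $d_{\setminus s}$ is the document with every token of $s$ replaced by the mask token.

```latex
\ell_i \;=\; \log P_{\mathrm{LM}}\!\left(t_i \,\middle|\, d_{\setminus s}\right),
\qquad i = 1, \dots, n
```

Under this notation, $-\ell_i$ is the surprisal of token $t_i$: the lower the log-probability, the less predictable the token is from its context, and the more informative the span is assumed to be.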

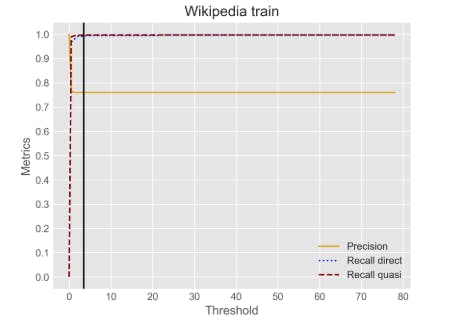
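The reduction of a variable-length list of log-probabilities to five fixed features can be sketched as follows. This is a minimal illustration using only the Python standard library; the function name `aggregate_log_probs` and the dictionary keys are assumptions made for this example, and the log-probability values in the usage note are invented rather than produced by BERT.

```python
import math
import statistics


def aggregate_log_probs(log_probs):
    """Reduce a variable-length list of per-token log-probabilities
    to five fixed features: min, max, median, mean and sum."""
    if not log_probs:
        raise ValueError("a span must contain at least one token")
    return {
        "min": min(log_probs),
        "max": max(log_probs),
        "median": statistics.median(log_probs),
        "mean": statistics.fmean(log_probs),
        "sum": math.fsum(log_probs),
    }


# Hypothetical log-probabilities for a three-token span (illustrative values).
features = aggregate_log_probs([-0.5, -2.0, -4.5])
```

Because every span yields the same five keys regardless of its token count, the result can be concatenated with an encoding of the PII type and passed to any tabular classifier.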

For the classifier itself, we conduct experiments using a simple logistic regression model as well as a more advanced classification framework based on AutoML. AutoML (He et al., 2021) provides a principled approach to hyper-parameter tuning and model selection, efficiently searching for the combination of hyper-parameters and models that yields the best performance. In our experiments, we use the AutoGluon toolkit (Erickson et al., 2020), and more specifically AutoGluon's Tabular predictor (Erickson et al., 2020), which consists of an ensemble of several base models. After training each of these base models and performing hyper-parameter optimization on each, a weighted ensemble model is trained using a stacking technique. A list of all the base models of the Tabular predictor is shown in Table 11 in Appendix D. We use the Wikipedia biographies and the TAB corpus to fit the classifier.
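To make the logistic regression variant concrete, the sketch below fits a binary classifier that maps span features to the probability of the span being masked. It is a pure-Python stand-in written for illustration, not the authors' implementation: in practice one would likely rely on an existing library. The function names, the learning rate, the number of epochs and the toy training data (a single feature, the mean log-probability, with invented values) are all assumptions.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic_regression(X, y, lr=0.1, epochs=5000):
    """Fit weights and bias by batch gradient descent on the mean log-loss."""
    n, n_features = len(X), len(X[0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_mask_probability(w, b, x):
    """Probability that a span with feature vector x should be masked."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Toy data (invented): one feature per span, the mean log-probability.
# Label 1 = masked by the annotator, label 0 = kept in clear text.
X_train = [[-9.0], [-7.0], [-8.0], [-1.0], [-0.5], [-2.0]]
y_train = [1, 1, 1, 0, 0, 0]
w, b = fit_logistic_regression(X_train, y_train)
```

On this toy data, hard-to-predict spans (very negative log-probabilities) receive a mask probability above 0.5 and easily predicted spans a probability below 0.5, which mirrors the intuition that surprising spans carry more re-identification risk.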