Decoding the magic: how machines speak human language

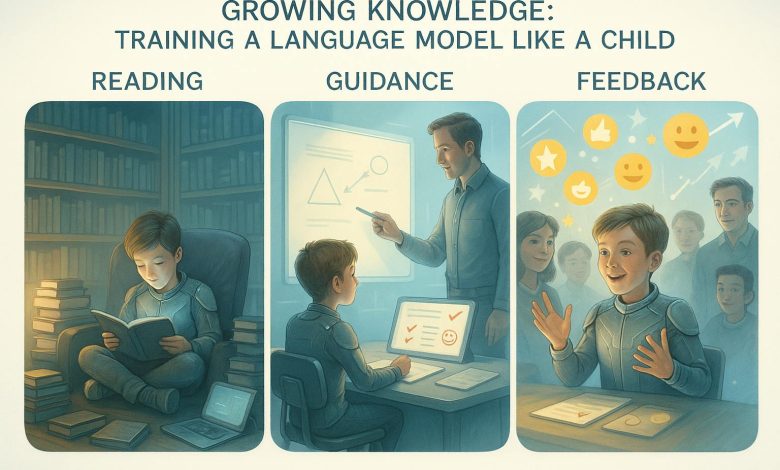

Training a large language model is like teaching someone to talk and behave. Imagine a child who learns the language and is gradually taught to answer questions. We can divide this process into three basic stages, each illustrated with an everyday example.

Step 1: Pre-training – learning by reading

Think about a growing child who reads every book in the home library. Without anyone explaining each thing explicitly, the child picks up the language naturally by listening, reading, and absorbing context, grammar, and vocabulary.

How it works in LLMs:

- Learning from all kinds of texts: The model “reads” a huge amount of text from books, websites, and online forums. Just as a child picks up language patterns and common phrases, the model learns how words combine into meaningful sentences.

- Predicting the next word: Imagine a fill-in-the-blank game where the child must guess the missing word in a sentence. With repetition, the child starts to know which words fit best. In the same way, the model is trained to predict the next word in a sentence, which helps it learn the structure and flow of the language.
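To make next-word prediction concrete, here is a deliberately tiny sketch that counts which word follows which in a three-sentence corpus and then guesses the most frequent follower. It is an illustration only: the corpus and the names `train_bigram`, `predict_next`, and `next_word_probability` are made up for this example, and real LLMs use neural networks over subword tokens rather than word counts.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for every word, which words follow it in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def next_word_probability(counts, word, candidate):
    """Estimate P(candidate | word) from the raw counts."""
    followers = counts.get(word.lower())
    if not followers:
        return 0.0
    return followers[candidate.lower()] / sum(followers.values())


# Toy corpus (hypothetical data, standing in for "books, websites and forums").
corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]

model = train_bigram(corpus)
print(predict_next(model, "the"))
print(next_word_probability(model, "the", "cat"))
```

The more often a continuation appears in the text the model has read, the more probable it becomes. Pre-training pushes a neural network in the same direction at a vastly larger scale.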

Step 2: Learning right from wrong – supervised fine-tuning

Now imagine that the same child has a teacher who guides them toward appropriate behavior and acceptable language. The teacher shows the child examples of good answers and explains why certain answers are more appropriate than others.

The same concept applies to LLMs:

- Curated examples: At this stage, engineers give the model example questions paired with good answers. It's like a teacher who offers model answers to common questions.

- Adjusting the answers: The model is trained to imitate these good answers. For example, if the model could previously produce harmful or inappropriate answers (just as a child may imitate questionable language they have overheard), it is now corrected with examples of what to say instead.
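The curated examples described above can be sketched as question and answer pairs turned into training sequences. The snippet below is a minimal illustration under stated assumptions: whitespace splitting stands in for a real tokenizer, the per-token loss values are invented, and masking the question tokens so that only the answer contributes to the loss is one common practice rather than a universal rule. The names `build_sft_example` and `masked_average_loss` are hypothetical.

```python
def build_sft_example(prompt, response):
    """Join a prompt and its model answer, marking which tokens to learn from.

    The mask is 0 for prompt tokens (given, not imitated) and 1 for
    response tokens (the "model answer" the model should imitate).
    """
    prompt_tokens = prompt.split()
    response_tokens = response.split()
    tokens = prompt_tokens + response_tokens
    loss_mask = [0] * len(prompt_tokens) + [1] * len(response_tokens)
    return tokens, loss_mask


def masked_average_loss(per_token_losses, loss_mask):
    """Average the per-token losses over the response tokens only."""
    total = sum(loss * keep for loss, keep in zip(per_token_losses, loss_mask))
    count = sum(loss_mask)
    return total / count if count else 0.0


# Hypothetical curated example: a question and the answer we want imitated.
tokens, mask = build_sft_example(
    "How do I reset my password ?",
    "Open settings and choose reset password .",
)

# Invented per-token losses, one per token, as a model might report them.
losses = [4.0] * 7 + [1.0, 2.0, 1.0, 2.0, 1.0, 2.0, 1.0]
print(tokens)
print(mask)
print(masked_average_loss(losses, mask))
```

With the mask in place, the high loss values on the question tokens are ignored, so training pressure goes entirely toward reproducing the good answer.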

Real-life analogy:

Think about a teacher explaining why certain rules – for example, “Don't run in the corridors” – are important, even though the child already knows how to run. The teacher's guidance shapes the child's understanding of the right way to act or respond.

Step 3: Learning from feedback – Reinforcement Learning from Human Feedback (RLHF)

Imagine that the child is now taking part in a class discussion. They speak up, and the teacher and classmates offer praise for insightful points and gentle correction for weak arguments. Over time, the child refines how they express ideas based on this feedback.

How it works in LLMs:

- Multiple attempts and feedback: The model generates several answers to the same question, much like a student who offers different answers during a discussion.

- Reward system: Human reviewers (acting as the teacher) rate these answers. Good answers earn “rewards”, while poor answers receive lower scores. Think of it as a gold-star system where every star helps the student understand what they did well.

- Adjusting future answers: Based on these scores, the model learns to prefer answers that earn high ratings. It is similar to a child learning which explanations are the most convincing or correct.

- Two methods – PPO and DPO:

  - PPO (Proximal Policy Optimization): Imagine a teacher who not only hands out grades but also monitors how closely the student's answers match the ideal ones. This keeps the student's answers balanced between creativity and correctness. In training terms, PPO limits how far each update can move the model away from its earlier behavior.

  - DPO (Direct Preference Optimization): In a second classroom, the teacher skips the detailed monitoring and simply gives feedback on overall performance. This simplifies the process and works well when there is no time for detailed monitoring. In training terms, DPO learns directly from pairs of preferred and rejected answers, without a separate reward model.
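As a rough numerical sketch of the two methods above, the snippet below computes PPO's clipped objective for a single sampled answer and the DPO loss for a single preference pair. The function names, the toy log-probabilities, and the default values `clip_eps=0.2` and `beta=0.1` are illustrative assumptions. Real training code works on batches of token-level log-probabilities produced by a neural network.

```python
import math


def ppo_clipped_objective(logp_new, logp_old, advantage, clip_eps=0.2):
    """PPO's clipped surrogate objective for one sampled answer.

    logp_new / logp_old: log-probability of the answer under the updated
    and the previous model. advantage: how much better than expected the
    answer scored. Clipping the ratio removes any incentive to move far
    from the previous model in a single update.
    """
    ratio = math.exp(logp_new - logp_old)
    clipped_ratio = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
    return min(ratio * advantage, clipped_ratio * advantage)


def dpo_loss(policy_logp_chosen, policy_logp_rejected,
             ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """DPO loss for one (chosen, rejected) answer pair.

    The loss shrinks as the trained model favors the chosen answer over
    the rejected one more strongly than the frozen reference model does.
    """
    margin = beta * (
        (policy_logp_chosen - ref_logp_chosen)
        - (policy_logp_rejected - ref_logp_rejected)
    )
    # -log(sigmoid(margin)) written as log(1 + exp(-margin)).
    return math.log1p(math.exp(-margin))


# PPO: the new model doubles the probability of a well-rated answer,
# but the objective only credits the change up to the clip limit.
print(ppo_clipped_objective(math.log(2.0), 0.0, advantage=1.0))

# DPO: a model that prefers the chosen answer gets a lower loss than
# a model that is indifferent between the two answers.
print(dpo_loss(-1.0, -2.0, -1.5, -1.5))
print(dpo_loss(0.0, 0.0, 0.0, 0.0))
```

The contrast matches the classroom picture: PPO needs a score for each answer and keeps every update on a short leash, while DPO only needs to know which of two answers was preferred.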

Further reading:

For those interested in digging deeper into InstructGPT and RLHF (the main topics I focused on in this article), the following papers are recommended:

- InstructGPT paper: Ouyang et al. (2022). This paper focuses on aligning language models with user intent through fine-tuning. Link to the paper

- PPO and DPO techniques:

  - “Policy Optimization with RLHF – PPO/DPO/ORPO”, by Sulbha Jindal (2024). This article gives an overview of various policy optimization techniques in RLHF, including PPO and DPO. Link to the article

  - “Unpacking DPO and PPO: Disentangling Best Practices for Learning from Preference Feedback” (2024). This paper examines the differences between DPO and PPO and the best practices in the RLHF context. Link to the paper

These resources offer a thorough grounding in the techniques and recent progress in training LLMs to align with human preferences.