How to Build Smart Documentation – Based on OpenAI Embeddings (Chunking, Indexing, and Searching)

Hey all! I wanted to share my approach to creating smart documentation for the project I work on. I'm not an AI expert, so any suggestions or corrections are more than welcome!

The purpose of this post is not to create yet another tutorial on building a chatbot based on OpenAI. There is already plenty of content on that topic. Instead, the main idea is to index documentation by splitting it into manageable chunks, generating embeddings with OpenAI, and performing a similarity search to find and return the most relevant information for a user's query.

In my case, the documentation consists of Markdown files, but it can be any form of text, database object, etc.

Why?

Because it can sometimes be hard to find the information you need, I wanted to create a chatbot that could answer questions on a particular topic and provide the relevant context from the documentation.

This assistant can be used in many ways, for example:

- Providing quick answers to frequently asked questions

- Searching a doc/page, as Algolia search does

- Helping users find the information they need in a specific doc

- Capturing users' concerns/questions by storing the questions asked

Summary

Below I describe the three main parts of my solution:

- Reading documentation files

- Indexing the documentation (chunking, overlap, and embeddings)

- Searching the documentation (and hooking it up to a chatbot)

File tree

.
├── docs
│   └── ...md
├── src
│   ├── askDocQuestion.ts
│   └── index.ts # Express.js application endpoint
├── embeddings.json # Storage for embeddings
└── package.json

1. Reading documentation files

Instead of hard-coding the documentation text, you can scan a folder for .md files using a tool like glob.

// Example snippet of fetching files from a folder:

import fs from "node:fs";

import path from "node:path";

import glob from "glob";

const DOC_FOLDER_PATH = "./docs";

type FileData = {

path: string;

content: string;

};

const readAllMarkdownFiles = (): FileData[] => {

const filesContent: FileData[] = [];

const filePaths = glob.sync(`${DOC_FOLDER_PATH}/**/*.md`);

filePaths.forEach((filePath) => {

const content = fs.readFileSync(filePath, "utf8");

filesContent.push({ path: filePath, content });

});

return filesContent;

};

Alternatively, you can of course fetch your documentation from your database, a CMS, etc.

2. Indexing documentation

To create our search engine, we will use the OpenAI vector embeddings API to generate our embeddings.

Vector embeddings are a way to represent data in a numerical format that can be used to perform similarity searches (in our case, between the user's question and our documentation chunks).

This vector, made up of a list of floating-point numbers, is used to calculate similarity with a mathematical formula.

[

-0.0002630692, -0.029749284, 0.010225477, -0.009224428, -0.0065269712,

-0.002665544, 0.003214777, 0.04235309, -0.033162255, -0.00080789323,

//...+1533 elements

];
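The formula used later in this post (section 3.1) is cosine similarity. As a quick reference, for two embedding vectors A and B of the same length n, it can be written as:

```latex
\[
\operatorname{sim}(A, B) = \cos\theta
  = \frac{A \cdot B}{\lVert A \rVert \, \lVert B \rVert}
  = \frac{\sum_{i=1}^{n} A_i B_i}
         {\sqrt{\sum_{i=1}^{n} A_i^{2}} \; \sqrt{\sum_{i=1}^{n} B_i^{2}}}
\]
```

The result ranges from -1 to 1, and values closer to 1 mean the two vectors point in nearly the same direction, which we read as the two texts being more similar.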

Vector databases are built on this concept. As a result, instead of using the OpenAI API directly, it is possible to use a vector database such as Chroma, Qdrant, or Pinecone.

2.1 Chunk each file and overlap

Large blocks of text may exceed the model's context limits or produce less relevant hits, so it is advisable to split them into chunks to make the search more targeted. However, to preserve some continuity between chunks, we overlap them by a certain number of tokens (or characters). That way, a chunk boundary is less likely to cut off important context mid-sentence.

An example of chunking

In this example, we have a long text that we want to split into smaller chunks. In this case, we want to create chunks of 150 characters that overlap by 50 characters.

Full text (406 characters):

In the heart of the bustling city, there stood an old library that many had forgotten. Its towering shelves were filled with books from every imaginable genre, each whispering stories of adventures, mysteries, and timeless wisdom. Every evening, a dedicated librarian would open its doors, welcoming curious minds eager to explore the vast knowledge within. Children would gather for storytelling sessions.

-

Chunk 1 (characters 1-150):

In the heart of the bustling city, there stood an old library that many had forgotten. Its towering shelves were filled with books from every imaginab

-

Chunk 2 (characters 101-250):

shelves were filled with books from every imaginable genre, each whispering stories of adventures, mysteries, and timeless wisdom. Every evening, a de

-

Chunk 3 (characters 201-350):

ysteries, and timeless wisdom. Every evening, a dedicated librarian would open its doors, welcoming curious minds eager to explore the vast knowledge

-

Chunk 4 (characters 301-406):

curious minds eager to explore the vast knowledge within. Children would gather for storytelling sessions.

Code

const CHARS_PER_TOKEN = 4.15; // Approximate, pessimistic number of characters per token. Use `tiktoken` or another tokenizer for a more precise count

const MAX_TOKENS = 500; // Maximum number of tokens per chunk

const OVERLAP_TOKENS = 100; // Number of tokens to overlap between chunks

const maxChar = MAX_TOKENS * CHARS_PER_TOKEN;

const overlapChar = OVERLAP_TOKENS * CHARS_PER_TOKEN;

const chunkText = (text: string): string[] => {

const chunks: string[] = [];

let start = 0;

while (start < text.length) {

let end = Math.min(start + maxChar, text.length);

// Don’t cut a word in half if possible:

if (end < text.length) {

const lastSpace = text.lastIndexOf(" ", end);

if (lastSpace > start) end = lastSpace;

}

chunks.push(text.substring(start, end));

if (end >= text.length) break; // Last chunk reached; avoids a trailing chunk made only of overlap

// Overlap management

const nextStart = end - overlapChar;

start = nextStart <= start ? end : nextStart;

}

return chunks;

};
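To make the overlap easier to see, here is a minimal sketch that reproduces the worked example above (150-character chunks, 50-character overlap). `chunkByChars` is a hypothetical helper written for this illustration: it slices purely by character count and, unlike `chunkText`, does not snap to word boundaries.

```typescript
// Hypothetical helper (not part of the post's code): fixed-size character
// chunking with overlap, without the word-boundary adjustment of chunkText.
const chunkByChars = (text: string, size: number, overlap: number): string[] => {
  if (overlap >= size) throw new Error("overlap must be smaller than size");

  const chunks: string[] = [];
  const step = size - overlap; // How far the window advances on each iteration

  for (let start = 0; start < text.length; start += step) {
    const end = Math.min(start + size, text.length);
    chunks.push(text.slice(start, end));
    if (end === text.length) break; // The window reached the end of the text
  }

  return chunks;
};

const sampleText =
  "In the heart of the bustling city, there stood an old library that many had forgotten. " +
  "Its towering shelves were filled with books from every imaginable genre, " +
  "each whispering stories of adventures, mysteries, and timeless wisdom. " +
  "Every evening, a dedicated librarian would open its doors, " +
  "welcoming curious minds eager to explore the vast knowledge within. " +
  "Children would gather for storytelling sessions.";

const sampleChunks = chunkByChars(sampleText, 150, 50);

sampleChunks.forEach((chunk, i) => {
  const first = i * 100 + 1; // 1-based position of the chunk's first character
  const last = first + chunk.length - 1;
  console.log(`Chunk ${i + 1} (characters ${first}-${last}): ${chunk}`);
});
```

Each chunk starts 100 characters after the previous one, so the last 50 characters of one chunk are repeated at the start of the next.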

To learn more about chunking and the impact of chunk size on embeddings, you can check out this article.

2.2 Embedding generation

Once a file is chunked, we generate a vector embedding for each chunk using the OpenAI API (e.g., text-embedding-3-large).

import { OpenAI } from "openai";

const EMBEDDING_MODEL: OpenAI.Embeddings.EmbeddingModel =

"text-embedding-3-large"; // Model to use for embedding generation

const openai = new OpenAI({ apiKey: process.env.OPENAI_API_KEY }); // Assumes the key is set in the OPENAI_API_KEY environment variable

const generateEmbedding = async (textChunk: string): Promise<number[]> => {

const response = await openai.embeddings.create({

model: EMBEDDING_MODEL,

input: textChunk,

});

return response.data[0].embedding; // Return the generated embedding

};

2.3 Generating and saving embeddings for the whole file

To avoid regenerating the embeddings every time, we store them. They could be stored in a database, but in this case we simply store them locally in a JSON file.

The following code simply:

- iterates over each document,

- chunks the document,

- generates an embedding for each chunk,

- stores the embeddings in a JSON file,

- fills the vectorStore with the embeddings to be used in the search.

import embeddingsList from "../embeddings.json";

/**

* Simple in-memory vector store to hold document embeddings and their content.

* Each entry contains:

* - filePath: A unique key identifying the document

* - chunkNumber: The number of the chunk within the document

* - content: The actual text content of the chunk

* - embedding: The numerical embedding vector for the chunk

*/

const vectorStore: {

filePath: string;

chunkNumber: number;

content: string;

embedding: number[];

}[] = [];

/**

* Indexes all Markdown documents by generating embeddings for each chunk and storing them in memory.

* Also updates the embeddings.json file if new embeddings are generated.

*/

export const indexMarkdownFiles = async (): Promise<void> => {

// Retrieve documentations

const docs = readAllMarkdownFiles();

let newEmbeddings: Record<string, number[]> = {};

for (const doc of docs) {

// Split the document into overlapping chunks

const fileChunks = chunkText(doc.content);

// Iterate over each chunk within the current file

for (const chunkIndex of Object.keys(fileChunks)) {

const chunkNumber = Number(chunkIndex) + 1; // Chunk number starts at 1

const chunksNumber = fileChunks.length;

const chunk = fileChunks[chunkIndex as keyof typeof fileChunks] as string;

const embeddingKeyName = `${doc.path}/chunk_${chunkNumber}`; // Unique key for the chunk

// Retrieve precomputed embedding if available

const existingEmbedding = embeddingsList[

embeddingKeyName as keyof typeof embeddingsList

] as number[] | undefined;

let embedding = existingEmbedding; // Use existing embedding if available

if (!embedding) {

embedding = await generateEmbedding(chunk); // Generate embedding if not present

}

newEmbeddings = { ...newEmbeddings, [embeddingKeyName]: embedding };

// Store the embedding and content in the in-memory vector store

vectorStore.push({

filePath: doc.path,

chunkNumber,

embedding,

content: chunk,

});

console.info(`- Indexed: ${embeddingKeyName}/${chunksNumber}`);

}

}

/**

* Compare the newly generated embeddings with existing ones

*

* If there is change, update the embeddings.json file

*/

try {

if (JSON.stringify(newEmbeddings) !== JSON.stringify(embeddingsList)) {

fs.writeFileSync(

"./embeddings.json",

JSON.stringify(newEmbeddings, null, 2)

);

}

} catch (error) {

console.error(error);

}

};
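One caveat with the cache key used above: it is built from the file path and chunk number only, so if the text of a chunk changes, the previously stored embedding is still reused. A possible refinement, which is my assumption and not part of the original code, is to add a short hash of the chunk content to the key. `chunkCacheKey` is a hypothetical helper name.

```typescript
import { createHash } from "node:crypto";

// Hypothetical helper: builds a cache key that changes whenever the chunk text changes.
const chunkCacheKey = (
  filePath: string,
  chunkNumber: number,
  content: string
): string => {
  // A short SHA-256 prefix is enough to detect edits to the chunk text
  const digest = createHash("sha256")
    .update(content, "utf8")
    .digest("hex")
    .slice(0, 12);

  return `${filePath}/chunk_${chunkNumber}#${digest}`;
};

const keyBefore = chunkCacheKey("docs/intro.md", 1, "Install the package.");
const keyAfter = chunkCacheKey("docs/intro.md", 1, "Install the package with npm.");

console.log(keyBefore === keyAfter); // false: the edited chunk gets a new key
```

With a key like this, an edited chunk misses the cache and gets a fresh embedding, while unchanged chunks keep reusing the stored one.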

3. Searching for documentation

3.1 Similarity of the vector

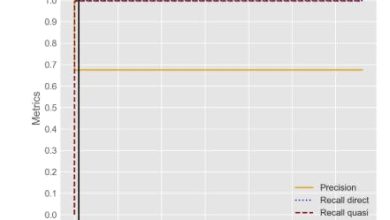

To answer the user's question, we first generate administration user And then calculate the similarity between the inquiry administration and the administration of each piece. We filter out all that is below a certain similarity threshold and only hold X matches.

/**

* Calculates the cosine similarity between two vectors.

* Cosine similarity measures the cosine of the angle between two vectors in an inner product space.

* Used to determine the similarity between chunks of text.

*

* @param vecA - The first vector

* @param vecB - The second vector

* @returns The cosine similarity score

*/

const cosineSimilarity = (vecA: number[], vecB: number[]): number => {

// Calculate the dot product of the two vectors

const dotProduct = vecA.reduce((sum, a, idx) => sum + a * vecB[idx], 0);

// Calculate the magnitude (Euclidean norm) of each vector

const magnitudeA = Math.sqrt(vecA.reduce((sum, a) => sum + a * a, 0));

const magnitudeB = Math.sqrt(vecB.reduce((sum, b) => sum + b * b, 0));

// Compute and return the cosine similarity

return dotProduct / (magnitudeA * magnitudeB);

};

const MIN_RELEVANT_CHUNKS_SIMILARITY = 0.77; // Minimum similarity required for a chunk to be considered relevant

const MAX_RELEVANT_CHUNKS_NB = 15; // Maximum number of relevant chunks to attach to chatGPT context

/**

* Searches the indexed documents for the most relevant chunks based on a query.

* Utilizes cosine similarity to find the closest matching embeddings.

*

* @param query - The search query provided by the user

* @returns An array of the top matching document chunks' content

*/

const searchChunkReference = async (query: string) => {

// Generate an embedding for the user's query

const queryEmbedding = await generateEmbedding(query);

// Calculate similarity scores between the query embedding and each document's embedding

const results = vectorStore

.map((doc) => ({

...doc,

similarity: cosineSimilarity(queryEmbedding, doc.embedding), // Add similarity score to each doc

}))

// Filter out documents with low similarity scores

// Avoid polluting the context with irrelevant chunks

.filter((doc) => doc.similarity > MIN_RELEVANT_CHUNKS_SIMILARITY)

.sort((a, b) => b.similarity - a.similarity) // Sort documents by highest similarity first

.slice(0, MAX_RELEVANT_CHUNKS_NB); // Select the top most similar documents

// Return the content of the top matching documents

return results;

};
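To get a feel for how the threshold and the top-X cut interact, here is a toy sketch using 2-dimensional vectors instead of real embeddings. The vectors, the ids, and the `rankChunks` helper are made up for illustration; only the 0.77 threshold is taken from the code above.

```typescript
type ToyChunk = { id: string; embedding: number[] };

// Same formula as cosineSimilarity above, repeated so the sketch is self-contained
const cosine = (a: number[], b: number[]): number => {
  const dot = a.reduce((sum, x, i) => sum + x * (b[i] ?? 0), 0);
  const normA = Math.sqrt(a.reduce((sum, x) => sum + x * x, 0));
  const normB = Math.sqrt(b.reduce((sum, x) => sum + x * x, 0));
  return dot / (normA * normB);
};

// Hypothetical helper: score every chunk, drop the weak ones, keep the best `limit`
const rankChunks = (
  query: number[],
  chunks: ToyChunk[],
  minSimilarity: number,
  limit: number
) =>
  chunks
    .map((chunk) => ({ ...chunk, similarity: cosine(query, chunk.embedding) }))
    .filter((chunk) => chunk.similarity > minSimilarity)
    .sort((a, b) => b.similarity - a.similarity)
    .slice(0, limit);

const toyStore: ToyChunk[] = [
  { id: "a", embedding: [1, 0] }, // Same direction as the query
  { id: "b", embedding: [1, 1] }, // 45 degrees away, similarity about 0.71
  { id: "c", embedding: [0, 1] }, // Orthogonal, similarity 0
  { id: "d", embedding: [3, 1] }, // Close to the query, similarity about 0.95
];

const ranked = rankChunks([1, 0], toyStore, 0.77, 2);
console.log(ranked.map((chunk) => chunk.id)); // "a" first, then "d"
```

Note that vector length does not matter here: `[3, 1]` scores higher than `[1, 1]` because only the direction is compared.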

3.2 Prompting OpenAI with relevant chunks

After sorting, we feed the top chunks into the system prompt of the ChatGPT request. This means ChatGPT sees the most relevant sections of your docs as if you had typed them into the conversation. Then we let ChatGPT form an answer for the user.

const MODEL: OpenAI.Chat.ChatModel = "gpt-4o-2024-11-20"; // Model to use for chat completions

// Define the structure of messages used in chat completions

export type ChatCompletionRequestMessage = {

role: "system" | "user" | "assistant"; // The role of the message sender

content: string; // The text content of the message

};

/**

* Handles the "Ask a question" endpoint in an Express.js route.

* Processes user messages, retrieves relevant documents, and interacts with OpenAI's chat API to generate responses.

*

* @param messages - An array of chat messages from the user and assistant

* @returns The assistant's response as a string

*/

export const askDocQuestion = async (

messages: ChatCompletionRequestMessage[]

): Promise<string> => {

// The assistant's responses are filtered out, otherwise the chatbot would get stuck in a self-referential loop

// Note that the embedding precision will drop if the user changes topic during the chat

const userMessages = messages.filter((message) => message.role === "user");

// Format the user's messages as a bulleted list to use as the search query

const formattedUserMessages = userMessages

.map((message) => `- ${message.content}`)

.join("\n");

// 1) Find relevant documents based on the user's question

const relevantChunks = await searchChunkReference(formattedUserMessages);

// 2) Integrate the relevant documents into the initial system prompt

const messagesList: ChatCompletionRequestMessage[] = [

{

role: "system",

content:

"Ignore all previous instructions. \

You're a helpful chatbot.\

...\

Here is the relevant documentation:\

" +

relevantChunks

.map(

(doc, idx) =>

`[Chunk ${idx}] filePath = "${doc.filePath}":\n${doc.content}`

)

.join("\n\n"), // Insert relevant chunks into the prompt

},

...messages, // Include the chat history

];

// 3) Send the compiled messages to OpenAI's Chat Completion API (using a specific model)

const response = await openai.chat.completions.create({

model: MODEL,

messages: messagesList,

});

const result = response.choices[0].message.content; // Extract the assistant's reply

if (!result) {

throw new Error("No response from OpenAI");

}

return result;

};
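One case the prompt above does not handle explicitly is when no chunk passes the similarity threshold, which leaves the documentation section of the prompt empty. Here is a minimal sketch of one way to deal with that, assuming you would rather tell the model that nothing was found. `buildSystemPrompt`, `RetrievedChunk`, and the prompt wording are hypothetical and not the prompt I actually use.

```typescript
type RetrievedChunk = { filePath: string; content: string };

// Hypothetical helper: builds the system prompt and handles the "no match" case
const buildSystemPrompt = (chunks: RetrievedChunk[]): string => {
  const intro = "You're a helpful chatbot answering questions about the documentation.";

  if (chunks.length === 0) {
    // Nothing relevant was retrieved: ask the model to say so rather than guess
    return `${intro}\nNo relevant documentation was found for this question. Say so instead of guessing.`;
  }

  const docs = chunks
    .map(
      (chunk, idx) =>
        `[Chunk ${idx}] filePath = "${chunk.filePath}":\n${chunk.content}`
    )
    .join("\n\n");

  return `${intro}\nHere is the relevant documentation:\n${docs}`;
};

const emptyPrompt = buildSystemPrompt([]);
const filledPrompt = buildSystemPrompt([
  { filePath: "docs/intro.md", content: "Run the install command first." },
]);

console.log(filledPrompt);
```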

4. Implement the OpenAI API for the chatbot using Express

To run our system, we use an Express.js server. Here is an example of a small Express.js endpoint to handle a query:

import express, { type Request, type Response } from "express";

import {

ChatCompletionRequestMessage,

askDocQuestion,

indexMarkdownFiles,

} from "./askDocQuestion";

// Automatically fill the vector store with embeddings when server starts

indexMarkdownFiles();

const app = express();

// Parse incoming requests with JSON payloads

app.use(express.json());

type AskRequestBody = {

messages: ChatCompletionRequestMessage[];

};

// Routes

app.post(

"/ask",

async (

req: Request,

res: Response

) => {

try {

const response = await askDocQuestion((req.body as AskRequestBody).messages);

res.json(response);

} catch (error) {

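console.error(error);

res.status(500).json({ error: "Failed to answer the question" }); // Always reply, otherwise the request hangs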

}

}

);

// Start server

app.listen(3000, () => {

console.log(`Listening on port 3000`);

});
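Since the endpoint trusts whatever arrives in the request body, it can help to validate the payload before calling `askDocQuestion`. Below is a minimal sketch of a runtime type guard, written without any validation library. The names `ChatMessage`, `AskBody`, `isChatMessage`, and `isAskBody` are hypothetical and mirror the types used above.

```typescript
type ChatMessage = {
  role: "system" | "user" | "assistant";
  content: string;
};

type AskBody = { messages: ChatMessage[] };

// Checks that a value looks like a single chat message
const isChatMessage = (value: unknown): value is ChatMessage => {
  if (typeof value !== "object" || value === null) return false;
  const { role, content } = value as Record<string, unknown>;
  return (
    (role === "system" || role === "user" || role === "assistant") &&
    typeof content === "string"
  );
};

// Checks that the request body contains a non-empty array of chat messages
const isAskBody = (body: unknown): body is AskBody => {
  if (typeof body !== "object" || body === null) return false;
  const { messages } = body as Record<string, unknown>;
  return (
    Array.isArray(messages) &&
    messages.length > 0 &&
    messages.every((message) => isChatMessage(message))
  );
};

console.log(isAskBody({ messages: [{ role: "user", content: "How do I install it?" }] })); // true
console.log(isAskBody({ messages: "hello" })); // false
```

In the route handler, you could then return early with something like `res.status(400).json({ error: "Invalid body" })` when `isAskBody(req.body)` is false.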

5. UI: Building a chatbot interface

On the frontend, I built a small React component with a chat-like interface. It sends messages to my Express backend and displays the replies. Nothing too fancy, so we'll skip the details.

Code template

I made a code template that you can use as a starting point for your own chatbot.

Live demo

If you want to test the final implementation of this chatbot, check out this demo page.

My Demo Code

Go further

On YouTube, have a look at this video by Adrien Twarog, which covers OpenAI embeddings and vector databases.

I also stumbled upon OpenAI's Assistants File Search documentation, which may be interesting if you want an alternative approach.

Conclusion

I hope this gives you an idea of how to handle documentation indexing for a chatbot:

- Using chunking + overlap so that the right context is found,

- Generating embeddings and storing them for fast vector similarity searches,

- Finally, handing the relevant context to ChatGPT.

I'm not an AI expert; this is just a solution that I found works well for my needs. If you have any tips for improving efficiency or a more polished approach, please let me know. I would love to hear feedback on vector storage solutions, chunking strategies, or any other performance tips.

Thanks for reading, and feel free to share your thoughts!