Authors:

(1) Rafael Kuffner Dos Anjos;

(2) Joao Madeiras Pereira.

Table of Links

Abstract and 1 Introduction

2 Related Work and 2.1 Virtual Avatars

2.2 Point Cloud Visualization

3 Test Design and 3.1 Setup

3.2 User Representations

3.3 Procedure

3.4 Virtual Environment and 3.5 Task Descriptions

3.6 Questions and 3.7 Participants

4 Results and Discussion and 4.1 User Preferences

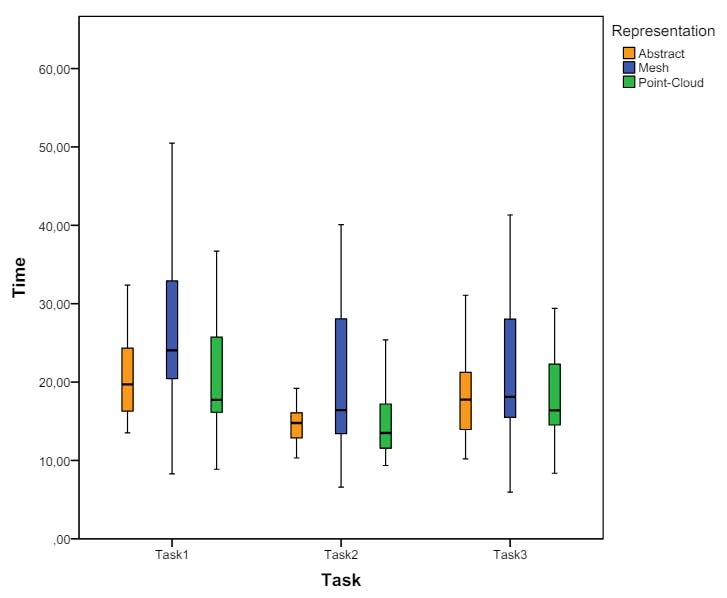

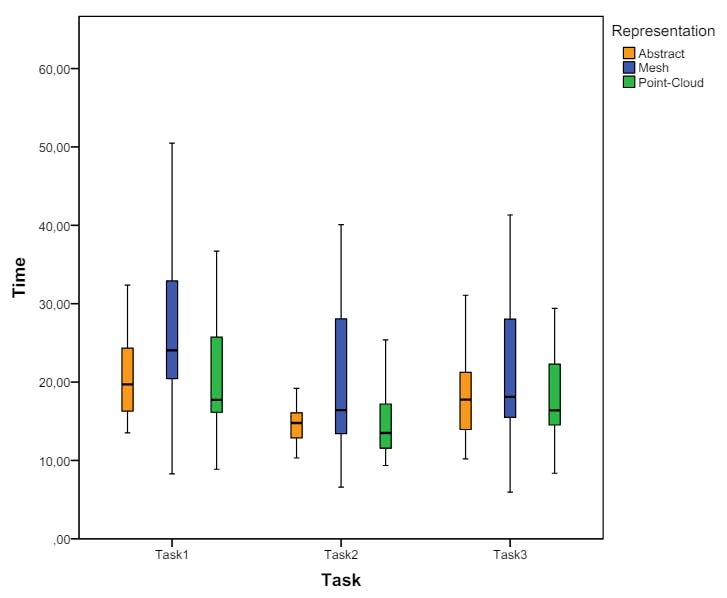

4.2 Task Performance

4.3 Discussion

5 Conclusions and References

5 Conclusions

Head-mounted displays remove the user's own body from view, which reduces the sense of presence in virtual reality sessions. One way to overcome this problem is to use a self-embodied avatar, which improves presence and overall distance estimation in VR setups. Factors that may influence the sense of embodiment include the realism of the avatar and the perspective from which it is viewed (first or third person). Avatar realism is subject to the Uncanny Valley, which also affects self-embodied avatars. However, the effects of both realism and perspective on self-embodied avatars are not yet fully understood in the literature. We therefore used three representations that vary in realism, following the Uncanny Valley curve from an abstract to a realistic representation, each combined with both perspectives (1PP and 3PP). For the realistic representation we used a point-cloud avatar, which uses an affordable depth sensor to capture the real user and render them within the virtual environment. To assess each representation-perspective combination, we chose natural tasks such as walking while avoiding obstacles and catching thrown objects.
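The text does not detail how the depth sensor's data becomes a point-cloud avatar. A common way to obtain such points is pinhole back-projection of each depth pixel, followed by a rigid transform from sensor space into the virtual environment. The sketch below illustrates only that general technique; the intrinsics `fx`, `fy`, `cx`, `cy`, the metre units, the helper names `depth_to_points` and `to_world`, and the axis conventions are all hypothetical assumptions, not the authors' pipeline.

```python
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def depth_to_points(depth: Sequence[Sequence[float]],
                    fx: float, fy: float,
                    cx: float, cy: float) -> List[Point3]:
    """Back-project a depth image (assumed in metres) into camera-space
    points with a pinhole model: x = (u - cx) * z / fx, y = (v - cy) * z / fy.
    Pixels with depth <= 0 are treated as missing readings and skipped."""
    points: List[Point3] = []
    for v, row in enumerate(depth):        # v = image row index
        for u, z in enumerate(row):        # u = image column index
            if z <= 0.0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


def to_world(points: Sequence[Point3],
             rotation: Sequence[Sequence[float]],
             translation: Sequence[float]) -> List[Point3]:
    """Apply a hypothetical rigid sensor-to-world transform p' = R p + t,
    e.g. from a one-off calibration placing the sensor in the VR scene."""
    out: List[Point3] = []
    for p in points:
        out.append(tuple(
            sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
            for i in range(3)))
    return out


if __name__ == "__main__":
    # Tiny 2x2 depth frame with two valid pixels and toy intrinsics.
    pts = depth_to_points([[0.0, 2.0], [1.0, 0.0]], 1.0, 1.0, 0.0, 0.0)
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    print(to_world(pts, identity, (0.0, 1.5, 0.0)))
```

A real capture loop would run this per frame and hand the points to a splat or point renderer. Lens distortion and filtering of sensor noise are ignored here for brevity.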

Based on the statistical analysis, discussion, and evaluation of the results, we propose the following guidelines regarding body representation and camera perspective for Virtual Reality applications:

• The impact of the Uncanny Valley is more pronounced in the third-person perspective (3PP), where it affects time efficiency in navigation tasks.

• In the first-person perspective (1PP), the impact of the Uncanny Valley is noticeable only in tasks that elicit a stronger sense of embodiment (e.g., reflex-based tasks).

• To avoid the impact of the Uncanny Valley, one must use either a simplified (abstract) or a realistic (point-cloud) representation of the participant.

• Using a more realistic representation (point-cloud) and perspective (1PP) can lead to faster task completion times, but lower effectiveness in performing tasks.

• A third-person perspective is recommended when spatial awareness is required in the horizontal navigation plane, with a slight advantage when using a realistic representation.

• Avoiding obstacles and reaching moving objects can be problematic with a realistic representation, due to occlusions created by the visual representation.

• Users' balance is negatively affected by a third-person perspective.

• Reflex-based tasks achieve better performance with a first-person perspective.

However, some aspects could not be clarified by this study: in particular, obstacle avoidance in the vertical plane, how poor distance estimation may influence these tasks, and whether body representation has an impact on this matter. In addition, an impact of the Uncanny Valley on reflex-based tasks was detected, but it requires further analysis using a wider variety of stimuli.

As future work, other tasks should be considered for both perspectives and avatars, such as collaboration between users, social environments, and communication tasks.
