Testing User Representation and Avatar Realism in VR Tasks

Authors:

(1) Rafael Kuffner Dos Anjos;

(2) Joao Madeiras Pereira.

Table of Contents

Abstract and 1 Introduction

2 Related Work and 2.1 Virtual Avatars

2.2 Point Cloud Visualization

3 Test Design and 3.1 Setup

3.2 User Representation

3.3 Methodology

3.4 Virtual Environment and 3.5 Task Description

3.6 Questionnaires and 3.7 Participants

4 Results and Discussion and 4.1 User Preferences

4.2 Task Performance

4.3 Discussion

5 Conclusions and References

3 Test Design

Our goal is to explore the impact of avatar realism and perspective on natural tasks. In this section, we describe the main aspects of the user representations and the design of the task experience. The following paragraphs present the task concept, the avatar representations, and the setup used in the test.

3.1 Setup

A wide-baseline setup was used for two main reasons. First, the Kinect sensor has a limited effective range (0.4 to 4.5 m, with skeleton tracking losing reliability beyond 2.5 m), and more space was required to properly assess the navigation task. If the user moved to the limits of a sensor's working range, the quality of the experience would be compromised; a wide-baseline setup ensures that the user's entire body is always visible to at least one camera. Second, since the third-person perspective is presented as one of the interaction paradigms, the participant's whole body must be visible at all times to avoid gaps in the representation. A narrow-baseline or single-sensor setup would capture only half of the participant's body, undermining the experience.

Five sensors were fixed to the lab walls where the study was held, covering an area of about 4 x 4 meters. As the proposed navigation tasks mainly took place between two targets, we mapped the environment so that the participant always faced a sensor while completing the tasks, keeping his/her hands visible in both the first-person and third-person perspectives. The physical setup chosen for our study can be seen in Figure 1.
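The coverage requirement described above can be checked offline for a candidate layout. The sketch below is ours, not the authors' tool: it samples the roughly 4 x 4 m tracking area on a grid and reports the points that no sensor sees within the 0.4 to 2.5 m reliable skeleton range. The 70-degree horizontal field of view, the `Sensor` class, the 2D floor-plan model and the grid step are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass

# Range limits taken from the text; the field of view is an ASSUMPTION.
MIN_RANGE_M = 0.4            # near limit of the depth sensor
RELIABLE_SKELETON_M = 2.5    # skeleton tracking degrades beyond this distance
HALF_FOV_RAD = math.radians(70.0) / 2  # assumed 70-degree horizontal FOV


@dataclass
class Sensor:
    """Hypothetical 2D model of a wall-mounted sensor on the floor plan."""
    x: float    # position in metres
    y: float
    yaw: float  # viewing direction in radians (0 = +x axis)


def sees_reliably(sensor: Sensor, px: float, py: float) -> bool:
    """True if (px, py) lies inside the sensor's FOV and reliable skeleton range."""
    dx, dy = px - sensor.x, py - sensor.y
    if not MIN_RANGE_M <= math.hypot(dx, dy) <= RELIABLE_SKELETON_M:
        return False
    # Angle between the viewing direction and the point, wrapped to [-pi, pi).
    angle = (math.atan2(dy, dx) - sensor.yaw + math.pi) % (2 * math.pi) - math.pi
    return abs(angle) <= HALF_FOV_RAD


def uncovered_cells(sensors, size_m=4.0, step_m=0.25):
    """Grid points of a size_m x size_m area that no sensor sees reliably."""
    n = int(round(size_m / step_m))
    gaps = []
    for i in range(n + 1):
        for j in range(n + 1):
            px, py = i * step_m, j * step_m
            if not any(sees_reliably(s, px, py) for s in sensors):
                gaps.append((px, py))
    return gaps
```

For example, `uncovered_cells([Sensor(0.0, 2.0, 0.0), Sensor(4.0, 2.0, math.pi)])` lists the grid points left uncovered by two facing sensors; an empty list would mean every sampled point is seen reliably by at least one sensor.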

3.2 User Representation

We chose three different user representations, informed by the well-known Uncanny Valley effect. Camera positioning in the third-person view is based on previous work by Kosch et al. [17], where the camera is placed above the user's head to provide better spatial awareness.
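As an illustration of the third-person camera placement, the hypothetical helper below positions the camera above the tracked head joint and, by default, slightly behind it, looking at the head. The offsets, the y-up convention and the yaw-based facing direction are assumptions; the text only states that the camera sits above the user's head.

```python
import math


def third_person_camera(head, yaw, up_offset=0.6, back_offset=1.0):
    """Place a third-person camera above (and, by assumption, behind) the head.

    head: (x, y, z) position of the tracked head joint, y pointing up.
    yaw: facing direction of the user about the y axis, radians (0 = +z).
    up_offset, back_offset: HYPOTHETICAL offsets in metres.
    Returns (camera_position, look_at_target).
    """
    hx, hy, hz = head
    forward_x, forward_z = math.sin(yaw), math.cos(yaw)  # horizontal forward vector
    camera = (hx - back_offset * forward_x,
              hy + up_offset,
              hz - back_offset * forward_z)
    return camera, head
```

Setting `back_offset=0.0` places the camera directly above the head.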

In all representations, the positions and rotations of the Kinect joints are mapped directly onto the avatars using direct (forward) kinematics.
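Forward (direct) kinematics composes each joint's rotation with those of its ancestors to place the next bone. The minimal sketch below does this for a serial chain using quaternions, assuming each rotation is expressed relative to the parent joint and each bone has a fixed rest-pose offset. The function names and the chain layout are hypothetical; this is neither the authors' code nor the Kinect SDK's API.

```python
def quat_mul(a, b):
    """Hamilton product of quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def rotate(q, v):
    """Rotate vector v by unit quaternion q, computed as q * v * conj(q)."""
    w, x, y, z = q
    _, rx, ry, rz = quat_mul(quat_mul(q, (0.0, *v)), (w, -x, -y, -z))
    return (rx, ry, rz)


def forward_kinematics(root_pos, local_rots, bone_offsets):
    """World positions of the joints of a serial chain.

    local_rots[i]: rotation of joint i relative to its parent (unit quaternion).
    bone_offsets[i]: rest-pose vector from joint i to joint i+1 in joint i's frame.
    """
    positions = [root_pos]
    world_rot = (1.0, 0.0, 0.0, 0.0)  # identity
    pos = root_pos
    for rot, offset in zip(local_rots, bone_offsets):
        world_rot = quat_mul(world_rot, rot)  # accumulate ancestor rotations
        step = rotate(world_rot, offset)
        pos = tuple(p + s for p, s in zip(pos, step))
        positions.append(pos)
    return positions
```

For instance, rotating a shoulder by 90 degrees about z swings an arm that points along +x in the rest pose so that it points along +y, and the forearm follows because it inherits the shoulder's rotation.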

3.2.1 Abstract

The first avatar is a simplified representation consisting of abstract components: spheres were used for each joint and the head, and cylinders for each bone connection. Only the joints tracked by the Microsoft Kinect were represented. Figures 2a and 2b show this representation from the first- and third-person perspectives (1PP and 3PP), respectively.
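Placing a cylinder between two joints reduces to finding its center, length and orientation. The sketch below assumes a unit-height cylinder aligned with +y and centered at the origin (a common primitive convention, assumed here) and returns the midpoint, the bone length, and an axis-angle rotation from +y to the bone direction. The helper name is hypothetical.

```python
import math


def bone_cylinder(a, b):
    """Fit a unit cylinder (height 1 along +y, centered at the origin) to joints a, b.

    Returns (center, length, axis, angle). To draw the bone: scale the cylinder
    along y by `length`, rotate it by `angle` radians about the unit `axis`,
    then translate it to `center`.
    """
    center = tuple((p + q) / 2 for p, q in zip(a, b))
    d = tuple(q - p for p, q in zip(a, b))
    length = math.sqrt(sum(c * c for c in d))
    if length == 0.0:
        return center, 0.0, (1.0, 0.0, 0.0), 0.0  # degenerate bone
    u = tuple(c / length for c in d)
    # Rotation taking +y onto u: axis = y cross u, angle = acos(y dot u).
    axis = (u[2], 0.0, -u[0])
    axis_len = math.hypot(axis[0], axis[2])
    angle = math.acos(max(-1.0, min(1.0, u[1])))
    if axis_len < 1e-9:
        # u is parallel to y: any horizontal axis works (angle is 0 or pi).
        return center, length, (1.0, 0.0, 0.0), angle
    return center, length, (axis[0] / axis_len, 0.0, axis[2] / axis_len), angle
```

The spheres need no orientation, so each joint sphere is simply translated to the joint position.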

3.2.2 Mesh

The second representation is a realistic mesh avatar resembling a human. This representation did not include animation of individual fingers, as the Kinect sensor does not track them. Figures 2c and 2d show this representation from the first- and third-person perspectives (1PP and 3PP), respectively.

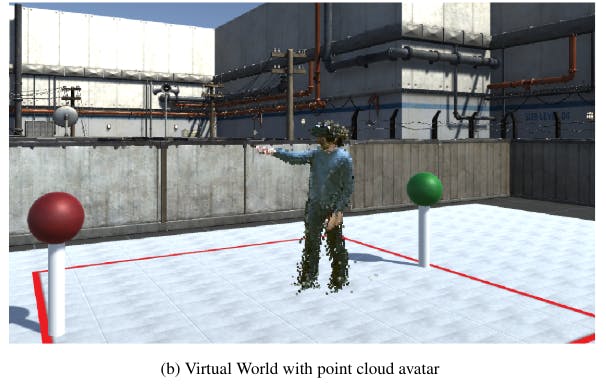

3.2.3 Point Cloud

This body representation is based on combining the separate point clouds captured by the Microsoft Kinect sensors. Each sensor first captures the skeleton information of every person in its field of view. By combining the depth and color values seen by the camera, a point cloud is created, and the points belonging to users are segmented from the background.
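The point cloud step can be illustrated with the standard pinhole back-projection: each depth pixel (u, v) with depth z maps to x = (u - cx) z / fx and y = (v - cy) z / fy. The sketch below applies it and keeps only pixels marked as belonging to a user. The intrinsics, the per-pixel user mask, and the assumption that color is already registered to the depth image are inputs we assume; the text does not specify how the authors obtain them.

```python
def depth_to_points(depth, color, user_mask, fx, fy, cx, cy):
    """Back-project a depth image into a colored point cloud of user pixels only.

    depth: rows of depth values in metres (0 = no reading).
    color: rows of (r, g, b) tuples registered to the depth image (assumed).
    user_mask: rows of booleans, True where the pixel belongs to a tracked user.
    fx, fy, cx, cy: pinhole intrinsics of the depth camera (assumed known).
    Returns a list of (x, y, z, r, g, b) tuples in the sensor's frame.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0 or not user_mask[v][u]:
                continue  # no depth reading, or a background pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z, *color[v][u]))
    return points
```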

At many points during the interaction, different cameras send very similar information, and due to the real-time nature of our problem, no merging or redundancy removal between the different streams is performed. Instead, we decided to apply a simple discard technique that takes into account which body parts are most relevant to the task. Using the skeleton information, we assign a priority to each joint according to user-defined parameters. For the VR scenario, we found hand information to be the most valuable, and head information was discarded.
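The priority-based discard step could look like the following sketch, in which each point is assigned to its nearest skeleton joint and handled according to that joint's priority. The three levels (discard, subsample, keep all), the use of the quality flag for fully kept points, the subsampling rate and the joint names are our assumptions for illustration; only the idea of user-defined per-joint priorities that favor the hands comes from the text.

```python
import math

# HYPOTHETICAL priority levels: 0 = discard, 1 = keep a subsample (low quality),
# 2 = keep every point (high quality). The values below are illustrative only.
PRIORITIES = {"head": 0, "hand_left": 2, "hand_right": 2}
DEFAULT_PRIORITY = 1
SUBSAMPLE_EVERY = 4


def filter_by_joint_priority(points, joints):
    """points: list of (x, y, z); joints: dict joint name -> (x, y, z).

    Returns (x, y, z, high_quality) tuples for the points that survive.
    """
    kept = []
    low_counter = 0  # counts low-priority points to subsample them
    for p in points:
        nearest = min(joints, key=lambda name: math.dist(p, joints[name]))
        prio = PRIORITIES.get(nearest, DEFAULT_PRIORITY)
        if prio == 0:
            continue  # discarded body part
        if prio >= 2:
            kept.append((*p, True))
        else:
            if low_counter % SUBSAMPLE_EVERY == 0:
                kept.append((*p, False))
            low_counter += 1
    return kept
```

Because the discard runs on each sensor independently, it avoids any cross-stream merging, which fits the real-time constraint mentioned above.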

Since each sensor is connected to its own computer, we transmit both skeleton and point cloud data over the network to the host computer where the application is running. For each point, we transmit (X, Y, Z, R, G, B, Q): the 3D coordinates, the color, and one bit indicating high or low quality. This last bit is needed by the host application to adjust the rendering parameters: higher-quality points are more densely grouped and therefore require a smaller splat size, whereas subsampled areas must use larger splats to create closed surfaces.
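A possible wire layout for the per-point record (X, Y, Z, R, G, B, Q) is sketched below with Python's `struct` module. The byte layout (little-endian float32 coordinates, one byte per color channel, and a whole byte for the quality bit) and the splat radii are assumptions; the text does not give the actual encoding or sizes.

```python
import struct

# HYPOTHETICAL little-endian layout: three float32 coordinates, three uint8
# color channels and one byte holding the quality bit (16 bytes per point).
POINT_FORMAT = struct.Struct("<3f4B")


def pack_points(points):
    """points: iterable of (x, y, z, r, g, b, q) with q in {0, 1}."""
    return b"".join(POINT_FORMAT.pack(x, y, z, r, g, b, q)
                    for x, y, z, r, g, b, q in points)


def unpack_points(data):
    """Inverse of pack_points: a list of (x, y, z, r, g, b, q) tuples."""
    return list(POINT_FORMAT.iter_unpack(data))


# Illustrative splat radii in metres: dense high-quality points (q = 1) get
# small splats, subsampled regions (q = 0) get larger ones to close the surface.
SPLAT_RADIUS_M = {1: 0.004, 0: 0.010}


def splat_radius(q):
    return SPLAT_RADIUS_M[q]
```

Spending a full byte on Q keeps records aligned at 16 bytes; packing the bit into spare bits elsewhere would save bandwidth at the cost of extra decoding.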

The position of each sensor is configured beforehand, following a calibration step. The data received over the network is then transformed into the shared coordinate frame and rendered in the environment using surface-aligned splats. For interaction purposes, the transmitted skeleton information is used. We can successfully transform and render the transmitted avatars at 30 frames per second, allowing smooth interaction for the user. Figures 2e and 2f show this representation from the first- and third-person perspectives, respectively.
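Bringing each sensor's points into the shared frame amounts to applying its calibrated rigid transform, p_world = R p + t. The sketch below assumes the calibration step yields a 3x3 rotation matrix and a translation vector per sensor; the function name and data layout are hypothetical.

```python
def to_world(points, rotation, translation):
    """Map sensor-space points to the shared world frame: p_world = R p + t.

    rotation: row-major 3x3 nested list R from calibration (assumed input).
    translation: (tx, ty, tz) from calibration (assumed input).
    points: iterable of tuples starting with (x, y, z); any trailing fields,
    such as color and the quality bit, are passed through unchanged.
    """
    out = []
    for p in points:
        x, y, z = p[:3]
        world = tuple(
            row[0] * x + row[1] * y + row[2] * z + t
            for row, t in zip(rotation, translation)
        )
        out.append(world + tuple(p[3:]))
    return out
```

The same transform would be applied to each sensor's skeleton joints so that interaction and rendering share one coordinate frame.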