CPU contention and noisy neighbors

Ruin is the destination toward which all men rush, each pursuing his own best interest in a society that believes in the freedom of the commons. – Garrett Hardin

Some time ago, I worked at a company where we self-hosted almost everything. Whether that was a good decision or not deserves a separate discussion.

The incident happened on the morning of Boxing Day. Our RabbitMQ queues had a huge backlog and the cluster had lost quorum. The metrics looked fine, but the nodes were failing heartbeats. I connected to a machine to diagnose, and one look at top told the real story: %st was constantly high, fluctuating between 70 and 80%. We were experiencing the noisy neighbor effect.

What contention really is

Inside a Linux guest (or any other), the scheduler, CFS in Linux's case, sees only virtual CPUs. The hypervisor, however, juggles these vCPUs across a limited set of physical cores. If everyone wants to run at once, someone waits. Inside the VM, this waiting time surfaces as steal time (%st).

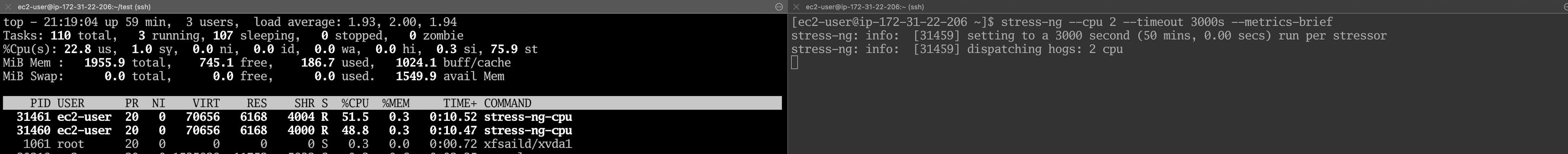
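To make the steal counter concrete, here is a minimal Go sketch that derives a steal percentage from two readings of the aggregate `cpu` line in `/proc/stat`. The helper names (`parseCPULine`, `stealPercent`, `readCPU`) are made up for this illustration, and the one-second sampling interval is an arbitrary choice.

```go
package main

import (
	"fmt"
	"os"
	"strconv"
	"strings"
	"time"
)

// cpuTimes holds counters taken from the aggregate "cpu" line of /proc/stat.
type cpuTimes struct {
	total uint64 // sum of user..steal, in clock ticks
	steal uint64 // ticks spent runnable while the hypervisor ran something else
}

// parseCPULine parses an aggregate "cpu ..." line.
// Field order: user nice system idle iowait irq softirq steal guest guest_nice.
func parseCPULine(line string) (cpuTimes, error) {
	f := strings.Fields(line)
	if len(f) < 9 || f[0] != "cpu" {
		return cpuTimes{}, fmt.Errorf("unexpected /proc/stat line: %q", line)
	}
	var t cpuTimes
	// Sum user..steal only; guest time is already accounted in user/nice.
	for i, s := range f[1:9] {
		v, err := strconv.ParseUint(s, 10, 64)
		if err != nil {
			return cpuTimes{}, err
		}
		t.total += v
		if i == 7 { // eighth counter is steal
			t.steal = v
		}
	}
	return t, nil
}

// stealPercent returns the share of elapsed CPU time stolen between two samples.
func stealPercent(prev, cur cpuTimes) float64 {
	dt := cur.total - prev.total
	if dt == 0 {
		return 0
	}
	return 100 * float64(cur.steal-prev.steal) / float64(dt)
}

// readCPU samples the first line of /proc/stat (Linux only).
func readCPU() (cpuTimes, error) {
	data, err := os.ReadFile("/proc/stat")
	if err != nil {
		return cpuTimes{}, err
	}
	return parseCPULine(strings.SplitN(string(data), "\n", 2)[0])
}

func main() {
	prev, err := readCPU()
	if err != nil {
		fmt.Println("cannot read /proc/stat:", err)
		return
	}
	time.Sleep(time.Second)
	cur, err := readCPU()
	if err != nil {
		fmt.Println("cannot read /proc/stat:", err)
		return
	}
	fmt.Printf("steal: %.1f%%\n", stealPercent(prev, cur))
}
```

This is the same delta that top and mpstat report as %st, computed over a window you control.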

Simulation

To simulate a CPU contention scenario, spin up an AWS t3.small instance (2 vCPUs, burstable) and stress both vCPUs with stress-ng.

stress-ng --cpu 2 --timeout 3000s --metrics-brief

Burstable instances are designed around a baseline level of CPU, but they can burst above it using CPU credits. It is important to run stress-ng long enough to spend all CPU credits before moving on.
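How long is "long enough" depends on the credit balance and the earn rate of the instance size. The sketch below is a back-of-the-envelope estimate, assuming the usual definition that one CPU credit equals one vCPU at 100% for one minute. The function name and the numbers in `main` are placeholders, not values from AWS documentation.

```go
package main

import (
	"fmt"
	"math"
)

// hoursUntilThrottled estimates how long a burstable instance can sustain a
// given load before its CPU credit balance reaches zero.
// One CPU credit is one vCPU running at 100% for one minute.
// It returns +Inf when the load never drains the balance.
func hoursUntilThrottled(balance, earnPerHour float64, vcpus int, utilization float64) float64 {
	spendPerHour := float64(vcpus) * utilization * 60
	net := spendPerHour - earnPerHour
	if net <= 0 {
		return math.Inf(1)
	}
	return balance / net
}

func main() {
	// Placeholder inputs: take the real balance and earn rate for your
	// instance size from CloudWatch and the AWS documentation.
	h := hoursUntilThrottled(96, 24, 2, 1.0)
	fmt.Printf("credits exhausted after about %.1f hours\n", h)
}
```

If the estimate comes out longer than the stress-ng timeout, the instance may never be throttled during the test and the steal signal will not appear.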

Then create and run a simple web application that responds with a heavy payload.

package main

import (
	"fmt"
	"net/http"
	"strings"
)

// handler returns a deliberately large body so that every request costs CPU.
func handler(w http.ResponseWriter, r *http.Request) {
	response := strings.Repeat("Hello, world! ", 8000) // 14 bytes x 8000, about 110 KB
	fmt.Fprintln(w, response)
}

func main() {
	http.HandleFunc("/", handler)
	fmt.Println("Starting server on :8080...")
	if err := http.ListenAndServe(":8080", nil); err != nil {
		panic(err)
	}
}

Generate load and record the latency.

hey -n 10000 -c 200 http://<instance-address>:8080/

Identification

The key is to observe how long a runnable thread waits for a physical core that it cannot see. In practice, you combine three layers of evidence: VM-level counters, hypervisor or cloud telemetry, and workload symptoms, then correlate them.

VM-level counters

On any modern Linux AMI you have two first-class indicators:

- %st (steal time) in mpstat -P ALL 1 or top. This is the percentage of each one-second interval during which the hypervisor involuntarily descheduled the vCPU. Sustained values above 5% on Nitro hardware, or above 2% on burstable T-class instances, indicate measurable contention.

- schedstat wait time, revealed by perf sched timehist or perf sched record. This traces every context switch and captures the time each task spent waiting, in microseconds. A workload that normally shows sub-millisecond delays will show tens of milliseconds when the host is oversubscribed.

An example of a 30-second snapshot:

# per-CPU steal
mpstat -P ALL 1 30 | awk '/^Average:/ && $2 ~ /^[0-9]+$/ { printf "cpu%-2s %.1f%% steal\n", $2, $9}'

# scheduler wait histogram
sudo perf sched record -a -- sleep 30
sudo perf sched timehist --summary --state

Look for steal spikes that coincide with a rightward shift in the delay distribution.
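The run-queue wait counters can also be sampled directly, without perf. The sketch below reads `/proc/<pid>/schedstat`, whose three fields are time on CPU (ns), time waiting on a run queue (ns), and the number of timeslices. The type and function names are invented for this example, and the file is per task, so for a whole process you would iterate over `/proc/<pid>/task/*/schedstat`.

```go
package main

import (
	"fmt"
	"os"
	"time"
)

// schedstat mirrors the three fields of /proc/<pid>/schedstat.
type schedstat struct {
	runNs  uint64 // time spent on the CPU, nanoseconds
	waitNs uint64 // time spent waiting on a run queue, nanoseconds
	slices uint64 // number of timeslices run
}

// parseSchedstat parses the whitespace-separated contents of a schedstat file.
func parseSchedstat(s string) (schedstat, error) {
	var st schedstat
	_, err := fmt.Sscan(s, &st.runNs, &st.waitNs, &st.slices)
	return st, err
}

// avgWaitMicros returns the mean run-queue delay per timeslice, in
// microseconds, between two samples.
func avgWaitMicros(prev, cur schedstat) float64 {
	n := cur.slices - prev.slices
	if n == 0 {
		return 0
	}
	return float64(cur.waitNs-prev.waitNs) / float64(n) / 1000
}

// readSchedstat reads and parses one schedstat file (Linux only).
func readSchedstat(path string) (schedstat, error) {
	data, err := os.ReadFile(path)
	if err != nil {
		return schedstat{}, err
	}
	return parseSchedstat(string(data))
}

func main() {
	// /proc/self/schedstat covers only this program's main thread.
	const path = "/proc/self/schedstat"
	prev, err := readSchedstat(path)
	if err != nil {
		fmt.Println("cannot read schedstat:", err)
		return
	}
	time.Sleep(time.Second)
	cur, err := readSchedstat(path)
	if err != nil {
		fmt.Println("cannot read schedstat:", err)
		return
	}
	fmt.Printf("avg run-queue wait: %.1f us per timeslice\n", avgWaitMicros(prev, cur))
}
```

Pointed at the PID of the service under test, the same delta gives a cheap continuous signal to plot next to %st.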

Hypervisor and cloud telemetry

AWS publishes two derived metrics that map directly to CPU contention:

- CPUSurplusCreditBalance (burstable instances). When the credit balance is exhausted, the hypervisor throttles the vCPU back to its baseline percentage, causing an immediate rise in steal.

- CPUCreditUsage versus a flat CPUUtilization. If credit usage keeps climbing but the VM's utilization does not, you are hitting the credit ceiling rather than running out of work. On compute-optimized families, enable detailed monitoring and ingest %steal with the InstanceId dimension through the CloudWatch agent.

Correlating with workload symptoms

Latency-sensitive services often show a sharp inflection: average latency stays flat while p95 and p99 diverge. Overlay these percentiles with steal percentage and run-queue wait time to confirm causality. During real contention, throughput usually plateaus, because every request still completes; it just waits longer for compute. If throughput collapses, the bottleneck is probably elsewhere (I/O or memory).
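One way to put a number on that overlay is to compute tail percentiles per interval and correlate them with the steal series. The following is a rough sketch using the nearest-rank percentile and the Pearson coefficient. The sample data in `main` is invented, and a high coefficient supports the diagnosis but does not prove causality on its own.

```go
package main

import (
	"fmt"
	"math"
	"sort"
)

// percentile returns the p-th percentile (0 < p <= 100) of samples using the
// nearest-rank method. The input slice is not modified.
func percentile(samples []float64, p float64) float64 {
	if len(samples) == 0 {
		return math.NaN()
	}
	s := append([]float64(nil), samples...)
	sort.Float64s(s)
	rank := int(math.Ceil(p * float64(len(s)) / 100))
	if rank < 1 {
		rank = 1
	}
	if rank > len(s) {
		rank = len(s)
	}
	return s[rank-1]
}

// pearson returns the Pearson correlation coefficient of two equal-length
// series, or NaN when it is undefined.
func pearson(x, y []float64) float64 {
	if len(x) != len(y) || len(x) < 2 {
		return math.NaN()
	}
	n := float64(len(x))
	var sx, sy float64
	for i := range x {
		sx += x[i]
		sy += y[i]
	}
	mx, my := sx/n, sy/n
	var cov, vx, vy float64
	for i := range x {
		dx, dy := x[i]-mx, y[i]-my
		cov += dx * dy
		vx += dx * dx
		vy += dy * dy
	}
	if vx == 0 || vy == 0 {
		return math.NaN()
	}
	return cov / math.Sqrt(vx*vy)
}

func main() {
	// Invented per-minute samples: steal percentage and p99 latency in ms.
	steal := []float64{1, 2, 1, 30, 45, 40, 2}
	p99 := []float64{20, 22, 21, 180, 260, 240, 25}
	fmt.Printf("worst-case tail (p95 of the p99 series): %.0f ms\n", percentile(p99, 95))
	fmt.Printf("steal vs p99 correlation: %.2f\n", pearson(steal, p99))
}
```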

Advanced tracing

For intermittent episodes that last only seconds, confirm with an eBPF probe:

sudo timeout 60 /usr/share/bpftrace/tools/runqlen.bt

A widening histogram of run-queue length across CPUs, at the same moment that service latency spikes, is the final proof that the CPUs are oversubscribed.

Alerting thresholds

Empirically, alert only when sustained steal and user-visible latency degrade together.

Use an AND condition to prevent false positives; steal without user-visible latency can be tolerated for batch workloads.
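A compound rule of this kind might look like the sketch below. The steal threshold reuses the 5% figure mentioned earlier for Nitro hardware. The latency threshold, the three-sample window, and all type and function names are assumptions made for illustration and should be tuned to your own service.

```go
package main

import "fmt"

// alertRule describes a compound condition. All values are illustrative.
type alertRule struct {
	stealPct   float64 // steal percentage that counts as a breach
	p99Millis  float64 // p99 latency that counts as a breach (assumed SLO)
	minSamples int     // consecutive samples that must breach both
}

// sample is one observation interval.
type sample struct {
	stealPct  float64
	p99Millis float64
}

// shouldAlert reports whether the most recent minSamples samples breach BOTH
// thresholds. High steal with healthy latency never fires.
func (r alertRule) shouldAlert(window []sample) bool {
	if r.minSamples <= 0 || len(window) < r.minSamples {
		return false
	}
	for _, s := range window[len(window)-r.minSamples:] {
		if s.stealPct <= r.stealPct || s.p99Millis <= r.p99Millis {
			return false
		}
	}
	return true
}

func main() {
	rule := alertRule{stealPct: 5, p99Millis: 200, minSamples: 3}
	window := []sample{
		{stealPct: 1, p99Millis: 40},
		{stealPct: 12, p99Millis: 310},
		{stealPct: 15, p99Millis: 290},
		{stealPct: 11, p99Millis: 350},
	}
	fmt.Println("alert:", rule.shouldAlert(window))
}
```

Requiring several consecutive breaches filters out the short steal blips that every shared host produces.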

Mitigation

The first decision is the instance family. Compute-optimized or memory-optimized generations give each vCPU a larger share of scheduling time than burstable classes.

If the requirement is predictable latency, avoid oversubscribed families altogether, or use dedicated instances to remove multi-tenant variability.

How instances are placed also matters. Spread placement groups reduce the likelihood that two heavy tenants land on the same host; cluster placement groups improve east-west bandwidth but can increase the risk of sharing a box with a CPU-hungry neighbor. For latency-sensitive fleets, the spread policy is usually safer.

Build an automated response to the anomaly. A lightweight Lambda can poll %steal in CloudWatch and restart the instance. Because contention tends to be host-specific, recycling the instance onto a different host often resolves the incident within minutes.

if metric("CPUStealPercent") > 10 and age(instance) > 5min:
    cordon_from_alb(instance)
    instance.reboot()
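The pseudocode above could be fleshed out along the following lines. This is a sketch only: the `remediator` interface and its methods are hypothetical stand-ins for whatever load balancer and instance APIs you use, and the `recorder` type exists just to show the call order without touching real infrastructure.

```go
package main

import (
	"fmt"
	"time"
)

const (
	stealThreshold = 10.0            // percent, as in the pseudocode
	minAge         = 5 * time.Minute // leave freshly launched instances alone
)

// remediator abstracts the side effects. A real implementation would call the
// load balancer and instance APIs; this interface is hypothetical.
type remediator interface {
	Cordon(instanceID string) error
	Recycle(instanceID string) error
}

// shouldRecycle is the pure decision: high steal on a settled instance.
func shouldRecycle(stealPct float64, age time.Duration) bool {
	return stealPct > stealThreshold && age > minAge
}

// remediate drains the instance first, then recycles it. It reports whether
// any action was taken.
func remediate(r remediator, id string, stealPct float64, age time.Duration) (bool, error) {
	if !shouldRecycle(stealPct, age) {
		return false, nil
	}
	if err := r.Cordon(id); err != nil {
		return false, fmt.Errorf("cordon %s: %w", id, err)
	}
	if err := r.Recycle(id); err != nil {
		return false, fmt.Errorf("recycle %s: %w", id, err)
	}
	return true, nil
}

// recorder is a stand-in remediator that only records what it was asked to do.
type recorder struct{ actions []string }

func (r *recorder) Cordon(id string) error {
	r.actions = append(r.actions, "cordon "+id)
	return nil
}

func (r *recorder) Recycle(id string) error {
	r.actions = append(r.actions, "recycle "+id)
	return nil
}

func main() {
	rec := &recorder{}
	acted, err := remediate(rec, "i-example", 12.5, 20*time.Minute)
	fmt.Println("acted:", acted, "err:", err, "actions:", rec.actions)
}
```

Keeping the decision in a pure function makes the thresholds easy to unit test before the automation is allowed near production traffic.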

In Kubernetes, the same ideas apply. Deploy critical pods with identical requests and limits so that the scheduler assigns them the Guaranteed QoS class, combine this with topology spread constraints to keep them away from noisy neighbors, and restrict opportunistic batch jobs with CPU quotas to avoid self-inflicted contention.
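As a quick sanity check on pod specs, the QoS classification rules can be modeled in a few lines. The sketch below uses simplified, made-up types rather than the Kubernetes client libraries, and it assumes requests have already been defaulted (in a real cluster, a container with limits but no requests inherits its requests from the limits).

```go
package main

import "fmt"

// resources is a simplified stand-in for a container's resource values.
// Zero means "not set". These types are illustrative, not the client-go API.
type resources struct {
	cpuMilli int64
	memBytes int64
}

// container pairs the requests and limits of one container.
type container struct {
	requests resources
	limits   resources
}

// qosClass applies the documented rules to already-defaulted specs:
// Guaranteed when every container sets CPU and memory limits equal to its
// requests, BestEffort when nothing is set anywhere, Burstable otherwise.
func qosClass(containers []container) string {
	guaranteed := len(containers) > 0
	anySet := false
	for _, c := range containers {
		if c.requests != (resources{}) || c.limits != (resources{}) {
			anySet = true
		}
		if c.limits.cpuMilli == 0 || c.limits.memBytes == 0 || c.requests != c.limits {
			guaranteed = false
		}
	}
	switch {
	case guaranteed:
		return "Guaranteed"
	case anySet:
		return "Burstable"
	default:
		return "BestEffort"
	}
}

func main() {
	critical := []container{{
		requests: resources{cpuMilli: 500, memBytes: 256 << 20},
		limits:   resources{cpuMilli: 500, memBytes: 256 << 20},
	}}
	batch := []container{{
		requests: resources{cpuMilli: 100},
		limits:   resources{cpuMilli: 1000},
	}}
	fmt.Println("critical:", qosClass(critical))
	fmt.Println("batch:", qosClass(batch))
}
```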

If policy controls are inadequate, the remaining lever is vertical: overprovision vCPUs for latency-critical services. The additional cost may be lower than the opportunity cost.

If it is feasible, go with bare metal.

Conclusion

CPU contention is one of the most common and least visible performance risks in multi-tenant clouds. Because the hypervisor mediates access to physical cores, every guest sees only a projection of reality; by the time application latency shifts, the root cause is already upstream of the guest. In summary:

- Instrument first-class contention metrics, steal time and scheduler wait, at the service level.

- Choose isolation levels that match your SLA, from compute-optimized families to dedicated tenancy, and revisit these choices as traffic patterns evolve.

- Automate remediation, be it recycling instances, rebalancing, or scaling out, so that corrective action completes before customers can perceive degradation.

When these practices are built into the platform, CPU contention becomes a tracked variable rather than an operational surprise. The result is predictable latency, fewer escalations, and infrastructure that degrades gracefully under load instead of failing at the whim of an unpredictable neighbor. The remaining unpredictability becomes a business decision: pay for stronger isolation, or design software that can tolerate the residual variance.