Censorship, Free Speech, and the Aftermath of the Kiwi Farms Disruption

Authors:

(1) Anh V. Vu, University of Cambridge, Cambridge Cybercrime Center;

(2) Alice Hutchings, University of Cambridge, Cambridge Cybercrime Center;

(3) Ross Anderson, University of Cambridge and University of Edinburgh.

Table of Links

Abstract and 1. Introduction

2. Deplatforming and Its Effects

2.1. Related Work

2.2. The Kiwi Farms Disruption

3. Methods, Datasets, and Ethics, and 3.1. Forum and Imageboard Discussions

3.2. Telegram Chats and 3.3. Web Traffic and Search Trends Analytics

3.4. Tweets Made by the Online Community and 3.5. Data Licensing

3.6. Ethical Considerations

4. The Impact on Forum Activity and Traffic, and 4.1. The Impact of Major Disruptions

4.2. Platform Displacement

4.3. Traffic Fragmentation

5. The Impacts on Relevant Stakeholders and 5.1. The Community That Started the Campaign

5.2. The Industry Responses

5.3. The Forum Operators

5.4. The Forum Members

6. Tensions, Challenges, and Implications and 6.1. The Efficacy of the Disruption

6.2. Censorship versus Free Speech

6.3. The Role of Industry in Content Moderation

6.4. Policy Implications

6.5. Limitations and Future Work

7. Conclusion, Acknowledgments, and References

Appendix A.

6. Tensions, Challenges, and Implications

The disruption we studied may be the first time a series of infrastructure companies engaged in a collective effort to take down a website. While deplatforming can reduce the spread of abusive content and safeguard people's mental and physical safety, and is well established on social-media platforms such as Facebook, doing so without a proper process raises many philosophical, ethical, legal, and practical issues. This is why Meta set up its own Oversight Board.

6.1. The Efficacy of the Disruption

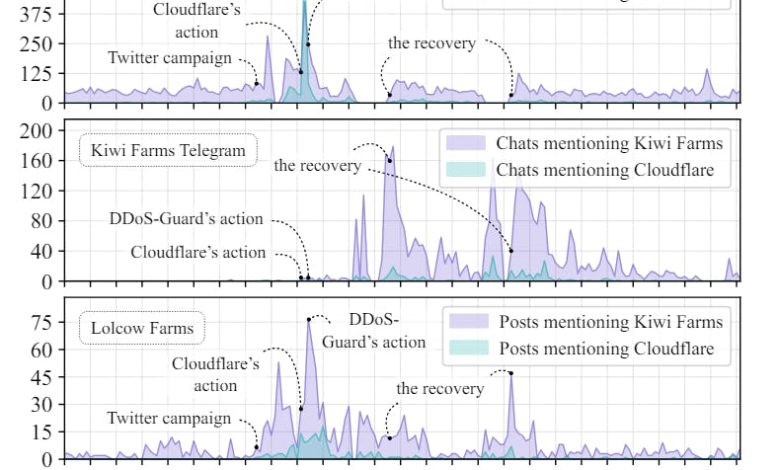

The disruption was more effective than previous DDoS attacks on the forum, as observed from our datasets. However, the effect, though large, was short-lived. While part of the activity moved to Telegram, half of the core members returned quickly after the forum recovered. And while most casual users were shaken off, others turned up to replace them. Cutting forum activity and users by half might count as a success if the goal of the campaign was just to hurt the forum, but if the goal was to “drop the forum”, it clearly failed. We lack ground-truth data on real-world harm caused by forum members, such as online complaints or police reports, so we cannot measure whether the campaign helped prevent physical and mental harm to people offline.

Kiwi Farms suffered further DDoS attacks and disruptions after our study period but managed to recover quickly, at some point reaching the same level of activity as before the disruption. It then moved mainly to the dark web from May to July 2023. The forum operator showed commitment and persistence despite sustained DDoS attacks and repeated action by infrastructure providers. He tried to bring Kiwi Farms back onto the clearnet in late July 2023 under a new domain, kiwifarms.pl, protected by its own DDoS mitigation system, KiwiFlare, but the clearnet version appears to be unstable.

One lesson is that while repeatedly disrupting digital infrastructure can significantly reduce the activity of online communities, it may only cut that activity down rather than eliminate it, as also noted in prior work [90]. Campaigns may also run out of steam after a few weeks, while the disrupted community becomes more determined to restore its gathering place. Like the re-emergence and relocation of extremist forums such as 8chan and the Daily Stormer, Kiwi Farms is back online. This supports the argument that truly disrupting active online platforms can be difficult, much like the short-term impact of taking down cybercrime markets [20] and DDoS-for-hire services [14], [15], [53], [54]. Deplatforming alone may not be sufficient to disperse or suppress an unpleasant online community in the long run, even when it is a joint action taken by a series of tech firms over several months. It can weaken a community for a while by fragmenting its traffic and activity and scaring off casual observers, but it can also make the community more determined and help it recruit new members through the Streisand effect, where attempts at censorship can be self-defeating [11], [91].

6.2. Censorship versus Free Speech

A major factor may be whether a community has capable and motivated defenders who can keep fighting back by restoring disrupted services, or whether those defenders can somehow be disabled, whether by arrest, legal restraint, or fatigue. This holds whether the defenders are forum operators or distributed volunteers. So under what circumstances can law enforcement take decisive action to take down an online forum, as the FBI did, for example, with the well-known RaidForums [18] and BreachForums [92]?

If some members of a forum break the law, is it a dissident organization with some bad actors, or a terrorist group to be pursued? Many dissident organizations attract hotheads, and groups ranging from animal-rights activists and climate-change protesters to trade unions occasionally break the law. But whether they are labeled terrorists or extremists is often a political matter. Many people would prefer to censor harmful information [93], but taking down a website on which an entire community relies is often hard to defend as a proportionate and necessary law-enforcement action. The threat of legal action can be countered by an operator who condemns any specific crimes complained of. In this case, the Kiwi Farms founder condemned swatting and other flagrant criminality [55]. Indeed, a competent provocateur will stop just short of the point where their actions would provoke a vigorous police response.

The free speech protected by the US First Amendment [94] is in clear tension with the safety of harassment victims. Over time, the Supreme Court has established tests to determine what speech is protected and what is not, including clear and present danger [95], bad tendency to incite or cause illegal activity [96], preferred freedoms [97], [98], and compelling state interest [99]. However, the lines drawn between them are not always clear. Other countries are stricter: France and Germany prohibit Nazi symbolism, and Turkey bans disrespect toward Mustafa Kemal Atatürk. In debates about the Online Safety Bill currently before the UK Parliament, the government at one point proposed banning 'legal but harmful' speech online, without making the underlying activities themselves unlawful [43]. These measures targeted websites that encourage eating disorders or self-harm. Following the tragic suicide of a young girl [100], tech companies are under pressure to exclude such material in the UK, either through their terms of service or by adjusting their recommendation algorithms.

There are further implications for deplatforming sites whose content is harmful but not clearly illegal. Requiring companies to do this, as proposed in the Online Safety Bill, would greatly expand online content regulation. The UK law gives censorship powers to the head of Ofcom, the broadcast regulator, which is a political appointment. This can be expected to lead to overblocking and to invite abuse of power by government officials or large tech firms, who could suppress legitimate voices or dissenting opinions. There is a clear risk of individuals or groups being unfairly targeted for political or ideological reasons.