AI Agents in Finance: Game-Changer or a Risky Gamble?

AI-powered financial risk models have prevented more than a billion dollars in fraud, but can AI models match human expertise?

Financial crime and banking fraud continue to evolve, with fraudsters constantly finding new ways to thwart financial institutions and slip past existing regulations. Traditional investigation methods for mitigating crime require sifting through endless spreadsheets and transaction files, a labor-intensive process that is often subject to human error. Now, with the rise of agentic artificial intelligence (AI), banks are looking to turn the tide, using intelligent automation to detect threats more precisely than ever.

In addition to enabling more personalized service, trend prediction and a slicker customer experience, AI and machine learning (ML) are also a “main application” for fraud detection. “AI algorithms can identify suspicious activities with unrivaled precision and speed,” according to a blog post by William Harmony, founder of a financial compliance and risk management practice.

Unlike conventional AI chatbots, which require investigators to ask the right questions, AI agents independently collect evidence and recommend decisions. For example, Oracle

However, although AI has become an essential tool in fraud detection and anti-money laundering (AML) efforts, it is only as effective as the data on which it is trained. Joe Biddle, UK market director at Trapets, warns that overreliance on AI could lead to a dangerous false sense of security. The company operates halfway between traditional manual processes and AI-only approaches.

“We do not (currently) have AI models that can detect new threats that are not part of their training data. Criminals would inevitably develop new tactics that fall outside their scope,” Biddle said.

“If we were to rely solely on AI to prevent financial crime, we would have to constantly retrain these systems just to keep up.”

The good and the bad of AI in financial crime prevention

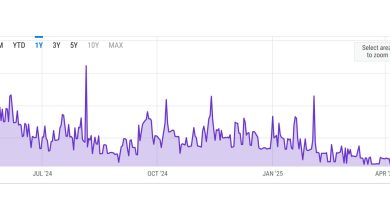

Between 2023 and 2024, the

However, many AI models lack transparency and operate as “black boxes”, which makes it difficult for institutions to explain to regulators, or to audit, how compliance decisions were made.

“Financial crime experts can examine things like criminal motives, global economic changes, geopolitical risks and so on to analyze trends and predict emerging threats. AI does not have this level of insight,” says Biddle. “If banks become too dependent on AI, this can create huge compliance problems, because regulators need to see clear reasoning for each action taken by a bank.”

In addition, as AI-based frameworks become more widespread, human investigators could lose their ability to recognize fraudulent behavior independently, creating a long-term knowledge gap.

“A reasonable starting point lies with high-performing employees who have a strong command of cross-functional processes. These people can help create pilot projects that allow AI agents to learn to manage complex organizational workflows and hand off tasks toward set objectives,”

“AI simply follows the pre-established ‘rules’ derived from the data on which it has been trained, so it is unable to explain every factor that led to its final decision, which is important for regulators. So, if an AI flags a transaction as suspicious, a human reviewer may not always be able to see the full chain of logic behind it,” said Biddle. “It is essential to have humans in the loop at every stage of the process.”

Financial institutions must remain smarter than their AI

Biddle stresses that AI should serve as an enhancement to human expertise rather than a complete replacement.

“Institutions should have the expertise to critically assess AI decisions instead of being satisfied with its confident answers. Ultimately, AI should be an additional layer of protection in the fight against financial crime, not the entire defense,” said Biddle. “This ensures that institutions do not become so dependent on these tools that they lose sight of the bigger picture.”

It is also important to keep an eye on compliance in a rapidly evolving sector, according to Ruban Phukan, CEO of Goodgist, a no-code platform that uses agentic AI for workplace productivity.

“AI is here, and it is evolving quickly. With the rise of generative AI (GenAI), it is essential that financial institutions continuously update their compliance frameworks, not only to stay ahead of regulatory changes but also to clearly track and explain how their AI systems work,” Phukan said.

“For example, ensuring that chatbot responses are accurate and legally sound means that banks must review the trained language models and implement guidelines on how AI-generated content is communicated to customers.”

For his part, Biddle says that financial institutions must stay one step ahead by continuously implementing AI defenses and ensuring that human oversight remains in place.

“The best approach is to adopt a hybrid model that combines rule-based systems with AI tools, always with strong human oversight. Any AI model used by banks must be continuously tested, refined and, above all, guided by human input,” added Biddle. “Banks' risk and compliance teams should be trained in AI or, better still, have AI experts embedded within them, so that they understand how these models work and where they could fail.”
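To make the hybrid idea concrete, here is a minimal Python sketch of how fixed rules and a model score might be combined, with every flagged transaction routed to a human reviewer along with the reasons it was flagged. It is an illustration only, not a description of any vendor's or bank's system: the `Transaction` fields, the thresholds, the placeholder country codes and the `triage` function are all hypothetical, and `model_score` stands in for the output of whatever ML model an institution might use.

```python
from dataclasses import dataclass, field


@dataclass
class Transaction:
    amount: float
    country: str
    model_score: float  # hypothetical fraud probability from an ML model, in [0, 1]


@dataclass
class Decision:
    action: str  # "clear" or "human_review"
    reasons: list[str] = field(default_factory=list)


# Illustrative values only; not taken from the article or from any regulation.
AMOUNT_LIMIT = 10_000.0
HIGH_RISK_COUNTRIES = {"XX", "YY"}  # placeholder country codes
SCORE_THRESHOLD = 0.8


def triage(tx: Transaction) -> Decision:
    """Combine fixed rules with a model score; never act without a human."""
    reasons: list[str] = []

    # Rule-based checks: transparent and easy to explain to a regulator.
    if tx.amount >= AMOUNT_LIMIT:
        reasons.append(f"rule: amount {tx.amount:.2f} at or above limit")
    if tx.country in HIGH_RISK_COUNTRIES:
        reasons.append(f"rule: country {tx.country} is on the watch list")

    # Model-based check: may catch patterns the rules miss, but is less explainable.
    if tx.model_score >= SCORE_THRESHOLD:
        reasons.append(f"model: score {tx.model_score:.2f} at or above threshold")

    # Anything flagged goes to a human reviewer, with the reasons recorded for audit.
    action = "human_review" if reasons else "clear"
    return Decision(action=action, reasons=reasons)


if __name__ == "__main__":
    for tx in (
        Transaction(amount=250.0, country="GB", model_score=0.05),
        Transaction(amount=12_500.0, country="GB", model_score=0.30),
        Transaction(amount=900.0, country="XX", model_score=0.91),
    ):
        print(tx, "->", triage(tx))
```

In this sketch the software never blocks a transaction on its own; it only decides what a person should look at and records why, which is one simple way to keep the reasoning visible to reviewers and auditors.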