How Your Avatar Shapes Your Virtual Reality Experience

Authors:

(1) Rafael Kuffner Dos Anjos;

(2) Joao Madeiras Pereira.

Table of Links

Abstract and 1 Introduction

2 Related work and 2.1 Virtual avatars

2.2 Point cloud visualization

3 Test design and 3.1 Setup

3.2 User representations

3.3 Methodology

3.4 Virtual environment and 3.5 Tasks description

3.6 Questionnaires and 3.7 Participants

4 Results and discussion and 4.1 User preferences

4.2 Task performance

4.3 Discussion

5 Conclusions and references

In this section, we present and discuss related work on user representation. First, we introduce and define virtual avatars and their associated concepts. Then, we briefly review techniques for point cloud visualization and discuss their use and viability in virtual reality setups.

2.1 Virtual avatars

An important part of the experience is the way users are represented in the virtual scene. Unlike CAVE-type systems, head-mounted displays occlude the users' real bodies, compromising the overall virtual reality session. One way to overcome this problem is to use a fully embodied representation of the user in the virtual environment [31]. A virtual body can provide users with a recognizable size reference and a connection to the virtual environment [13, 26], although studies indicate that a virtual body setup can still lead to distance underestimation [24]. The sense of presence is linked to the concept of proprioception, which is the ability to sense stimuli arising within the body regarding position, movement, and balance. The sense of embodiment towards an avatar is closely tied to the sense of presence in virtual reality (VR) and affects the way we interact with virtual elements [16]. This concept is subdivided into three components: (i) the sense of agency, i.e., the feeling of motor control over the virtual body; (ii) the sense of body ownership, i.e., the feeling that the virtual body is one's own body; and (iii) self-location, i.e., the experienced location of the self.

The level of realism of the avatar also plays an important role in the VR experience and in how it relates to a user's sense of embodiment. A common problem in this regard is the uncanny valley [21], which states that the acceptability of an artificial character does not increase linearly with its resemblance to the human form. Instead, after an initial increase in acceptability, there is a pronounced drop when the character is similar, but not identical, to the human form. In addition, Piwek et al. [22] report that characters falling in the deepest part of the valley become more acceptable when animated. The works of Lugrin et al. [19, 20] also indicate that the uncanny valley affects the sense of presence and the embodiment of avatars seen from the first-person perspective (1PP) through a head-mounted display.

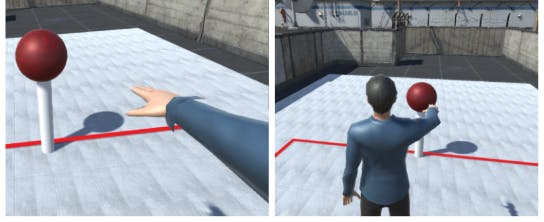

Another possibility when using a self-embodied avatar of the user is to change the perspective from which the avatar is viewed. This approach is commonly used in video games to increase the user's spatial awareness during navigation and interaction in the scene. A sense of body ownership is also possible when using artificial bodies (in real scenarios) and avatars (in virtual environment scenarios) in immersive setups. A classic experiment of this kind is the rubber hand illusion (RHI) [5]. In this illusion, subjects are led to believe that a rubber hand is actually their own hand, which is hidden from view, to the point of withdrawing their own hand when the rubber hand is threatened. The illusion has similar effects in virtual reality setups, where it is called the virtual hand illusion, and it can be induced by visuotactile [30] and visuomotor synchrony [29, 34].

The rubber hand illusion has also been shown to extend to full-body embodiment. Ehrsson et al. [9] demonstrated this by showing participants an image of their own body from a third-person perspective, captured with a stereoscopic camera. Lenggenhager et al. [18] confirmed this with a similar setup, showing that when their body was viewed from a third-person perspective behind them, users felt located where they saw the virtual body. In VR, orthogonal third-person views have been explored and recommended, for example, to help users set the posture of a motion-controlled virtual body [6].

Additional work by Salamin et al. used an augmented reality setup with a displaced camera and an HMD to show that the best perspective depends on the action performed: the first-person perspective (1PP) for more precise object manipulation and the third-person perspective (3PP) for actions involving movement. The same work also showed that users preferred 3PP over 1PP and needed less training in a ball-catching scenario [28]. Further work by Kosch et al. [17] found that the preferred viewpoint in 3PP is behind the user's head, offering a true third-person experience. Distance underestimation also occurs when the avatar is viewed from a third-person perspective [28].

2.2 Point cloud visualization

The main challenge in point cloud visualization is the unstructured nature of the data and its sparsity. Rendering point clouds with point primitives has several drawbacks compared to other techniques (for example, background/foreground confusion and loss of definition in close-ups) in low-resolution scenarios [1]. Katz et al. [14] addressed background/foreground confusion by estimating the direct visibility of point sets. However, in a mixed visualization scenario such as the one in this work, confusion still exists between the points rendered for the body and the mesh-based environment.
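To make the idea of direct visibility estimation concrete, the following is a minimal 2D sketch of the hidden point removal (HPR) operator described by Katz et al. [14]: points are spherically flipped about the viewpoint, and those whose flipped images lie on the convex hull of the flipped set plus the viewpoint are marked visible. It is not the implementation used in this work. The restriction to 2D, the function names, and the choice of the flipping radius R as max‖p‖·10^γ with γ = 2 are assumptions for illustration, and no point may coincide with the viewpoint.

```python
import math


def _cross(o, a, b):
    """2D cross product of vectors o->a and o->b (positive for a left turn)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull_indices(pts):
    """Andrew's monotone chain; returns the indices of the hull vertices."""
    order = sorted(range(len(pts)), key=lambda i: pts[i])
    lower, upper = [], []
    for i in order:
        while len(lower) >= 2 and _cross(pts[lower[-2]], pts[lower[-1]], pts[i]) <= 0:
            lower.pop()
        lower.append(i)
    for i in reversed(order):
        while len(upper) >= 2 and _cross(pts[upper[-2]], pts[upper[-1]], pts[i]) <= 0:
            upper.pop()
        upper.append(i)
    return set(lower[:-1] + upper[:-1])


def hidden_point_removal(points, viewpoint, gamma=2.0):
    """Indices of points estimated visible from viewpoint (2D HPR sketch).

    Assumes no point coincides with the viewpoint.
    """
    # Express every point relative to the viewpoint.
    rel = [(x - viewpoint[0], y - viewpoint[1]) for x, y in points]
    # The flipping radius R must exceed every ||p||; max||p|| * 10**gamma is an assumption.
    radius = max(math.hypot(x, y) for x, y in rel) * (10.0 ** gamma)
    flipped = []
    for x, y in rel:
        norm = math.hypot(x, y)
        # Spherical flip p + 2 (R - ||p||) p / ||p||, written as a scale factor on p.
        scale = 1.0 + 2.0 * (radius - norm) / norm
        flipped.append((x * scale, y * scale))
    flipped.append((0.0, 0.0))  # the viewpoint itself joins the hull computation
    hull = convex_hull_indices(flipped)
    return sorted(i for i in hull if i < len(points))


if __name__ == "__main__":
    # (0, 3) sits directly behind (0, 1) as seen from the origin.
    print(hidden_point_removal([(-1.0, 1.0), (0.0, 1.0), (1.0, 1.0), (0.0, 3.0)], (0.0, 0.0)))
    # prints [0, 1, 2]
```

The occluded point is dropped because its flipped image falls inside the hull. The same construction carries over to 3D by replacing the monotone-chain hull with a 3D convex hull.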

Surface reconstruction is the standard approach to visualizing point clouds [10], with several successful techniques for estimating the original surfaces from the points [12, 15]. A single depth stream can easily be remeshed using Delaunay triangulation [11], or multiple streams can be fused as in the work of Dou et al. [8]. Although single-stream triangulation can be performed in real time, multi-stream fusion can be a very resource-intensive task that requires specialized hardware for data processing.
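As a rough illustration of single-stream remeshing, the sketch below back-projects the valid pixels of one depth image with a pinhole camera model, triangulates their pixel coordinates with the Bowyer-Watson incremental Delaunay algorithm, and discards triangles that straddle a depth discontinuity. The intrinsics (fx, fy, cx, cy), the max_jump threshold, the super-triangle size, and all function names are hypothetical; the cited works [8, 11] may proceed differently, and this naive version is quadratic in the number of points, so it is not intended for real-time use.

```python
def backproject(depth, fx, fy, cx, cy):
    """Pinhole back-projection of a depth image given as rows of depths (0 = invalid).

    Returns (pixel coordinates, 3D points) for the valid pixels, in the same order.
    """
    pixels, points = [], []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z > 0:
                pixels.append((float(u), float(v)))
                points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return pixels, points


def circumcircle(a, b, c):
    """Circumcentre (ux, uy) and squared radius of triangle abc."""
    d = 2.0 * (a[0] * (b[1] - c[1]) + b[0] * (c[1] - a[1]) + c[0] * (a[1] - b[1]))
    sa, sb, sc = a[0] ** 2 + a[1] ** 2, b[0] ** 2 + b[1] ** 2, c[0] ** 2 + c[1] ** 2
    ux = (sa * (b[1] - c[1]) + sb * (c[1] - a[1]) + sc * (a[1] - b[1])) / d
    uy = (sa * (c[0] - b[0]) + sb * (a[0] - c[0]) + sc * (b[0] - a[0])) / d
    return ux, uy, (a[0] - ux) ** 2 + (a[1] - uy) ** 2


def delaunay(pts2d):
    """Bowyer-Watson Delaunay triangulation; returns triangles as index triples.

    Naive O(n^2) version; assumes a non-empty input without duplicate points.
    """
    n = len(pts2d)
    xs, ys = [p[0] for p in pts2d], [p[1] for p in pts2d]
    span = max(max(xs) - min(xs), max(ys) - min(ys), 1.0)
    mx, my = (min(xs) + max(xs)) / 2.0, (min(ys) + max(ys)) / 2.0
    # A large "super-triangle" enclosing all points; its vertices get indices n..n+2.
    pts = list(pts2d) + [(mx - 100 * span, my - 100 * span),
                         (mx + 100 * span, my - 100 * span),
                         (mx, my + 100 * span)]
    tris = {(n, n + 1, n + 2): circumcircle(*pts[n:n + 3])}
    for i in range(n):
        px, py = pts[i]
        # Triangles whose circumcircle strictly contains the new point are invalid.
        bad = [t for t, (ux, uy, r2) in tris.items()
               if (px - ux) ** 2 + (py - uy) ** 2 < r2 * (1.0 - 1e-12)]
        edge_count = {}
        for a, b, c in bad:
            for e in ((a, b), (b, c), (c, a)):
                key = (min(e), max(e))
                edge_count[key] = edge_count.get(key, 0) + 1
        for t in bad:
            del tris[t]
        # Re-triangulate the cavity: connect each boundary edge to the new point.
        for (a, b), count in edge_count.items():
            if count == 1:
                tris[(a, b, i)] = circumcircle(pts[a], pts[b], pts[i])
    # Drop every triangle that still touches a super-triangle vertex.
    return [t for t in tris if max(t) < n]


def triangulate_depth(depth, fx, fy, cx, cy, max_jump=0.1):
    """Mesh one depth image; max_jump (same units as depth) is a hypothetical threshold."""
    pixels, points = backproject(depth, fx, fy, cx, cy)
    tris = delaunay(pixels)
    # Discard triangles whose vertices span a depth discontinuity (e.g. a silhouette edge).
    keep = [t for t in tris
            if max(points[k][2] for k in t) - min(points[k][2] for k in t) <= max_jump]
    return points, keep
```

Triangulating in the image plane keeps each triangle's vertices close together in the sensor grid, while the depth-jump filter avoids bridging foreground and background surfaces across silhouette edges.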

Screen-aligned splats [33] have been proposed as a more efficient alternative to polygonal mesh rendering [27]. They are easy to implement in an interactive system, which makes them the go-to approach for real-time data visualization, and a visual appearance comparable to closed surfaces has been reported for visualization purposes [4]. Although surface-aligned splats [23, 3, 35] create a better approximation of the surface, real-time normal estimation can be an expensive operation. Splats have been used in the past for the visualization of point clouds [2], but not for the real-time reconstruction of the user's body.
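Part of what makes screen-aligned splats simple to implement is that each point only needs an on-screen size. A common approximation, sketched below as an assumption rather than the method of [33] or [27], projects a splat of world-space radius r at view depth z through a symmetric perspective projection with vertical field of view θ onto a viewport H pixels high, giving a diameter of about r·H / (z·tan(θ/2)) pixels. The function name, its parameters, and the one-pixel minimum are illustrative.

```python
import math


def splat_diameter_px(radius, view_depth, fov_y_deg, viewport_height_px, min_px=1.0):
    """Approximate on-screen diameter, in pixels, of a screen-aligned splat.

    radius: world-space splat radius; view_depth: distance along the view axis.
    Uses the on-axis approximation r * f / z (off-axis distortion is ignored).
    """
    if view_depth <= 0.0:
        raise ValueError("splat is behind the camera")
    # f = cot(fov_y / 2), the y scale factor of a symmetric perspective projection.
    focal = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    radius_ndc = radius * focal / view_depth  # radius in normalized device coordinates
    # NDC spans [-1, 1] over the viewport height, i.e. H / 2 pixels per NDC unit,
    # so the diameter is 2 * radius_ndc * H / 2.
    diameter = radius_ndc * viewport_height_px
    return max(diameter, min_px)  # keep distant splats at least one pixel wide


if __name__ == "__main__":
    # A 1 cm splat, 1 m away, 90 degree vertical FOV, 1080-pixel-high viewport.
    print(splat_diameter_px(0.01, 1.0, 90.0, 1080))  # approximately 10.8
```

In a GPU renderer this value would typically be computed per vertex in a shader and written to `gl_PointSize`; surface-aligned splats additionally need a per-point normal, which is the costly real-time estimation step noted above.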