AI is ruining what Reddit says makes it special

Is nothing sacred anymore?

Reddit is one of the last places on the internet where posts and comments don't feel like an endless stream of AI-generated content. But that is starting to change, and it threatens what Reddit says is its competitive edge.

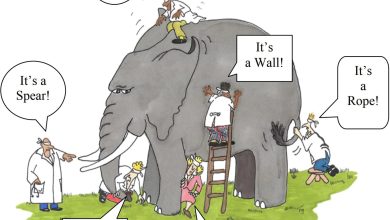

Reddit CEO Steve Huffman says what keeps people coming back to the site is information shared by real people who answer one another's questions. As the internet becomes saturated with AI-generated content, Huffman says Reddit's communities, curated and moderated by real people, set it apart from other social media platforms.

“The world needs community and shared knowledge, and we do it best,” Huffman told investors last week.

Reddit's traffic has grown significantly over the past year, partly thanks to users who search Google specifically for Reddit posts relevant to their questions.

Reddit's business model has come under increased scrutiny since the company went public in March last year. Since then, Reddit has leaned into advertising on its forums and inked deals with both OpenAI and Google that let their models train on Reddit content. In April, Reddit's stock fell after some analysts voiced concerns that the company's success could be inextricably tied to Google Search.

“Just a few years ago, adding ‘Reddit’ to the end of your search query was a novelty,” Huffman said on the company's February earnings call. “Today, it's a common way to find reliable information, recommendations, and advice.”

But some Reddit users now complain that AI bots are infiltrating the site's famously human communities, or that users are leaning on tools like ChatGPT, whose output can often be spotted by its formatting. ChatGPT loves bulleted lists and em dashes, and lately it has tended toward excessive positivity.

One user in r/singularity, a community dedicated to discussing AI progress, recently flagged a post by what they believed was an AI-generated account spreading misinformation about the July 2024 assassination attempt on President Donald Trump.

“AI just took over Reddit's front page,” the poster noted.

And on April 28, Reddit's chief legal officer said the company would send “formal legal demands” to researchers at the University of Zurich after they flooded one of the site's communities with bots. The moderators of r/changemyview said in a post that the researchers had conducted an “unauthorized experiment” to study how AI could be used to change people's views.

The researchers behind the experiment said in a Reddit post that 21 of the 34 accounts they used had been “shadowbanned,” meaning the content they posted would not be shown to other users. But they said they never received any communication from Reddit about violating its terms of service.

The moderators called the experiment unethical and said the AI had targeted some users on the forum “in personal ways that they did not sign up for.” According to the post, the AI went to extreme lengths in some comments, including pretending to be a rape victim, posing as a Black man opposed to Black Lives Matter, and posing as a person who had received substandard medical care, among other claims.

“Psychological manipulation risks posed by LLMs are an extensively studied topic,” the community's moderators wrote. “It is not necessary to experiment on non-consenting human subjects.”

A University of Zurich spokesperson told Business Insider that the school was aware of the study and was investigating it. The spokesperson said the researchers had decided on their own not to publish the study's results.

“In light of these events, the Ethics Committee of the Faculty of Arts and Social Sciences intends to adopt a stricter review process in the future and, in particular, to coordinate with the communities on the platforms before conducting experimental studies,” the spokesperson said.

For Reddit's business strategy, which relies heavily on advertising and on the pitch that the site offers the best information because it is grounded in real human responses, AI poses a risk to the platform. And Reddit has taken notice.

On Monday, Huffman said in a Reddit post that the company would work with third-party services to “keep Reddit human.” Huffman said Reddit's “strength is its people” and that “unwelcome AI in communities is a serious concern.”

“I haven't posted in a while, and let's face it, when I show up, it usually means something has gone sideways (and if it hasn't gone sideways yet, it probably will soon),” Huffman said.

Third-party services will now require more information from users, such as their age, Huffman said. Specifically, “we need to know whether you are a human,” he said.

A Reddit spokesperson told Business Insider that the Zurich experiment was unethical and that Reddit's automated tools had flagged most of the associated accounts before the experiment ended. The spokesperson said Reddit is always working on its detection capabilities and has further refined its processes since the experiment.

Still, some Reddit users say they are fed up with what they describe as a “proliferation of LLM bots in the last 10 months.”

“Some of them mimic brainrot users, offering one-word answers with emojis at the end,” one user wrote. “They post at an unnatural frequency, mostly in subreddits known for racking up upvotes.”