Emergence, Not Design, Is Powering AI’s Human-Like Abilities

The concept linking the origin of life to Microsoft's deal with OpenAI is elusive, counterintuitive and at odds with many beliefs, yet it promises something close to science fiction.

One of the smartest people alive today is a 96-year-old professor named Noam Chomsky.

He is a philosopher, a political thinker, some say an anarchist, but above all one of the most (if not the most) famous and influential linguists of all time. He developed the theory of the language acquisition device (LAD). He proposed that all languages share common grammatical features, a universal grammar (UG), which is innate, present from early childhood and does not need to be learned. He argued that our brain had developed a linguistic center dedicated to our human capacity to communicate with each other, similar to the centers for vision, hearing and sensation.

He was (probably) wrong.

The intertwined stories of design, adaptation, randomness, specialization and emergence are hard to tell. The subject is broad (spanning physics to psychology), contested (among researchers and laypeople alike), sensitive (it challenges intuition and religion), and expensive (billions of dollars are at stake).

I will simplify and cut some corners to fit the format. This won't be a history of the universe, but it will start with its birth.

An emergent property of the universe

It all starts with entropy.

Or rather, its absence.

Entropy is, roughly, disorder. When it all started, at the Big Bang, entropy was extremely low. Everything (matter, energy, time) was tightly packed together, calm and ordered. Then *Bang*: everything scattered, and from that point on the universe began to look like my boys' room, a huge random mess.

Yet within this randomness, given a very long stretch of time, a large enough number of elements and a constant set of rules, things here and there became less random and began to take shape: atoms bound into molecules, molecules into cells, cells into organs, and organs into creatures.

“Wait, stop. That can't be right. How can a space full of atoms randomly bumping into each other produce something as specific as a manga-loving teenager in Nikes, texting on an iPhone?”

This is known as emergence.

Emergence is the phenomenon in which complex systems have properties, behaviors or patterns that are not present in their individual components. These new properties “emerge” from interactions between the simpler parts.

It is not to be confused with design, which is intentional and planned.
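To make the definition concrete, here is a minimal sketch in Python using Conway's Game of Life, a classic toy example of emergence that is not part of the original argument. The only rule is local: a dead cell is born with exactly three live neighbours, and a live cell survives with two or three. Nothing in that rule mentions movement, yet a five-cell pattern (a “glider”) reappears every four generations, shifted one cell diagonally. The function name `step` and the coordinate layout are illustrative choices, not a reference implementation.

```python
from collections import Counter


def step(live):
    """Advance Conway's Game of Life by one generation.

    `live` is a set of (row, col) cells that are alive. Each cell only looks
    at its eight neighbours; nothing in the rule mentions movement or shape.
    """
    # Count how many live neighbours every candidate cell has.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


# A "glider": five live cells.
glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}

state = glider
for _ in range(4):
    state = step(state)

# After four generations the same shape reappears, shifted one cell diagonally.
shifted = {(r + 1, c + 1) for r, c in glider}
print(state == shifted)  # True
```

The glider's travel is a property of the pattern as a whole: no individual cell moves, and no line of the rule describes motion.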

If you feel that “the universe is too incredible and complex not to have been planned by something”, you are in good company: 84% of people still believe in a higher power that brings order to everything.

Hard to perceive, yet everywhere

Emergence is all around us. Molecules that happen (by chance) to be able to replicate are more likely to become abundant in an environment, and to emerge as “life”.

Random variations (mutations) produce traits that help organisms proliferate in their environment. Adaptation (evolution across lineages) turns a cluster of cells into one that can tell light from darkness. Add a few million years and a few levels of complexity, and the ability to see emerges. From billions of cells connected by electrical wiring and soaked in chemicals emerges the ability to store, and then to generate, abstract concepts.

From several such brain regions, each generating different abstractions, language can emerge. It is understandable that we assume a dedicated brain region is responsible for it. Not so long ago, we thought the pineal gland was the seat of the soul.

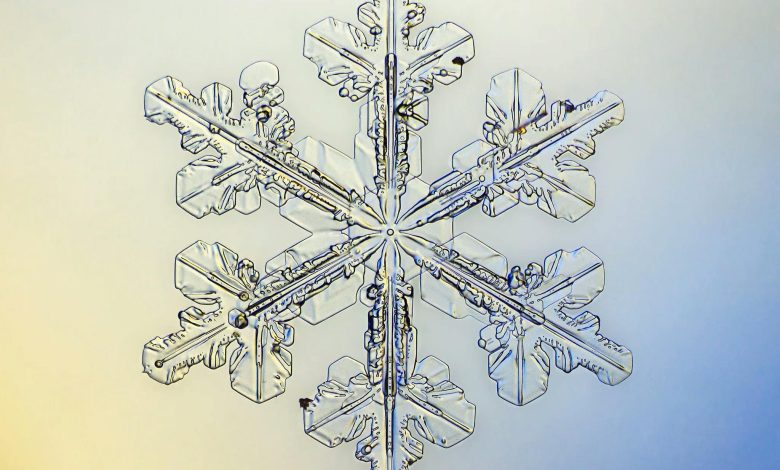

There are two types of emergence: simple and complex. In the simple type, we can predict what will emerge from the parts (snowflakes from water molecules). In the complex type, it is very hard to “guess” the outcome from the underlying components (consciousness from neurons).

Surprisingly, the fashionable kind of emergence, the one we read about (or fear) daily in the news, belongs to the simple type.

Emergent phenomena, digital too

This principle of emergence extends beyond natural phenomena to the digital domain. Large language models (LLMs), the backbone of today's popular AIs, are extremely complex entities. And from complexity we can expect (indeed, be sure) that phenomena will emerge that we did not build or plan (did not “design”). LLMs are not merely linguistic engines, algorithms that know which word grammatically comes next. They were trained on language in the wild, not in a laboratory.

So what can we expect to emerge from language in the wild? What does it contain beyond knowledge and facts? Here is a list:

- Code – people write code using language: words, numbers and “grammatical” rules.

- Mathematics – people describe mathematics (and chemistry and physics) using words, numbers and mathematical grammar.

- Logic – People describe thought, reasoning and order using language

- Emotions

- Intuition

- Opinions – all of these appear in language.

This is a partial list, and none of these things appear in language by accident.

I remember a book I read as a teenager, The Notebook (Le Grand Cahier) by Ágota Kristóf. It is written in the first-person plural and is deliberately devoid of emotion (as the twin protagonists put it: “We don't understand why we say ‘I like eating apples’ and ‘I like my mother’ with the same verb”). It is so unusual that my usually unreliable memory has held on to this quote for decades.

Thus, we can expect the following capacities to “emerge” in LLMs:

- Writing code

- Excelling at mathematics (and chemistry and physics)

- Displaying logic

- Showing emotions

- Having intuition

- Having opinions

Right now, we are at number 3 of 6.

AIs are already competent at code, mathematics and logic, and these skills should keep improving.

Emotions, intuition and opinions should follow, although I can see why their creators (OpenAI, Google, Apple) would try to remove them (and why Elon Musk's xAI, with Grok, will not…).

Does that mean they adapted to acquire these capacities? That they evolved advanced features to better fit the human environment around them? Or that their creators “designed” these features?

No.

These are emergent capacities that “jump out” as complexity grows, and their origins are hidden in the language sources the LLMs are fed.

This is also where the difference lies.

Emergence is not an organ, but does it matter?

Living creatures are built from “things” (organs, chemicals, electricity, environment, community, biome) that produce complexity, from which new things, like language, sometimes emerge.

Reverse-engineering language does not create the “things”; it creates their shadows. There are no organs, chemistry, electricity or environment there to produce emotions or motivation.

Does it matter? Maybe the shadow is detailed enough to be “the thing”? After all, we gained the ability to fly without sprouting wings, and we have outflown every bird in the sky.

I maintain that it does make a difference (taking sides in Plato's dilemma).

No matter how many works of art we look at, artistic ability does not emerge in us. Examples alone do not make us motivated, talented or driven.

So, if I were Microsoft, with several billion dollars riding on the question, I would ask: “Yes, but does it have an organ?” The rest of us can expect a wild ride, with many human capacities emerging from old-school A(G)I.