Structured LLM Prompts Drive Better Results with COCOGEN

Table of Links

Abstract and 1 Introduction

2 COCOGEN: Representing Commonsense Structures with Code and 2.1 Converting (T, G) into Python Code

2.2 Few-Shot Prompting for Generating G

3 Evaluation and 3.1 Experimental Setup

3.2 Script Generation: Proscript

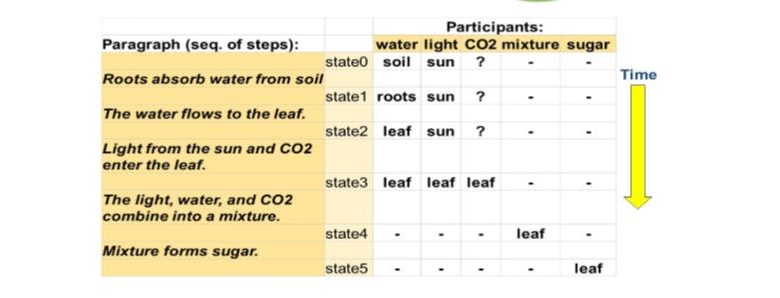

3.3 Entity State Tracking: Propara

3.4 Argument Graph Generation: ExplaGraphs

4 Analysis

5 Related Work

6 Conclusion, Acknowledgments, Limitations, and References

A Few-Shot Model Size Estimates

B Dynamic Prompt Creation

C Human Evaluation

D Dataset Statistics

E Sample Outputs

F Prompts

G Designing Python Classes for a Structured Task

H Impact of Model Size

I Variation in Prompts

4 Analysis

Structured prompts vs. Code-LLMs Which component is more important: using a Code-LLM, or formatting the structured input as code? To answer this, we experiment with a text prompt given to a Code-LLM (Codex), and a code prompt given to an NL-LLM (Davinci). Table 5 shows that both contributions are indeed important: performance improves for the NL-LLM Davinci both when we use a code prompt and when we use a Code-LLM. However, when using both a Code-LLM and a code prompt, the improvement is greater than the sum of the improvements from each alone.
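To make the text-prompt vs. code-prompt distinction concrete, the sketch below serializes one small script (a goal, its steps, and ordering edges) in both styles. It is a minimal illustration under assumptions: the function names (`to_text_prompt`, `to_code_prompt`, `to_identifier`), the `Step i -> Step j` text layout, and the `Tree`/`Node` code layout are hypothetical stand-ins loosely modeled on the idea of converting (T, G) into Python code, not the exact prompts used in the experiments.

```python
import re
from typing import Dict, List, Tuple

Edge = Tuple[int, int]  # hypothetical: (parent step index, child step index)


def to_text_prompt(goal: str, steps: List[str], edges: List[Edge]) -> str:
    """Hypothetical text format: the script as flat natural-language lines."""
    lines = [f"Goal: {goal}"]
    lines += [f"Step {i}: {step}" for i, step in enumerate(steps)]
    lines += [f"Step {a} -> Step {b}" for a, b in edges]
    return "\n".join(lines)


def to_identifier(step: str) -> str:
    """Map a step to a Python identifier ('mix batter' -> 'mix_batter').
    Assumes each step starts with a letter."""
    return re.sub(r"\W+", "_", step.strip().lower()).strip("_")


def to_code_prompt(goal: str, steps: List[str], edges: List[Edge]) -> str:
    """Hypothetical code format: the same script as Python source,
    declaring nodes first and then the edges between them."""
    names = [to_identifier(step) for step in steps]
    lines = ["class Tree:", f"    goal = {goal!r}", "",
             "    def __init__(self):", "        # nodes"]
    lines += [f"        {name} = Node()" for name in names]
    lines.append("        # edges")
    children: Dict[int, List[str]] = {}
    for parent, child in edges:
        children.setdefault(parent, []).append(names[child])
    for parent, kids in children.items():
        lines.append(f"        {names[parent]}.children = [{', '.join(kids)}]")
    return "\n".join(lines)


steps = ["buy ingredients", "mix batter", "bake in oven"]
print(to_text_prompt("bake a cake", steps, [(0, 1), (1, 2)]))
print(to_code_prompt("bake a cake", steps, [(0, 1), (1, 2)]))
```

Both strings carry the same graph; the ablation above varies only which format is shown to which model. The generated code is syntactically valid Python, which is what lets a Code-LLM treat the structure as ordinary source code.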

The results suggest that the effectiveness of dynamic prompts depends on both the training data and the task. In the edge-prediction sub-task of Proscript, edges between events in similar scripts are helpful, and Table 6 shows that the model was able to use this information effectively. In the script-generation sub-task of Proscript, Table 8 shows that KST also provides gains (Appendix B).
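Dynamic prompt creation means choosing, for each test input, the training examples most similar to it and placing them in the prompt. The sketch below illustrates that retrieval step only. It does not reproduce KST (Appendix B); as a stated assumption, it swaps in a plain bag-of-words cosine similarity, and the names `select_examples` and `build_prompt` are hypothetical.

```python
import math
from collections import Counter
from typing import List


def bow(text: str) -> Counter:
    """Bag-of-words vector: lowercase whitespace tokens with counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[tok] * b[tok] for tok in a)  # missing tokens count as 0
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def select_examples(query: str, train: List[str], k: int) -> List[str]:
    """Return the k training examples most similar to the query
    (a simple stand-in for the paper's selection method)."""
    q = bow(query)
    return sorted(train, key=lambda ex: cosine(q, bow(ex)), reverse=True)[:k]


def build_prompt(query: str, train: List[str], k: int = 2) -> str:
    """Concatenate the retrieved examples, then append the test input."""
    return "\n\n".join(select_examples(query, train, k) + [query])


train = ["bake a cake", "bake bread", "fix a flat tire", "plant a tree"]
print(build_prompt("bake a pie", train))
```

Whether this helps depends on the data: when neighbors share useful structure (as with edges in similar Proscript scripts) retrieval adds signal, but when the training set contains near-identical examples, the top-k can be filled with duplicates that add little new information.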

In ExplaGraphs, we found that the training data contains many near-identical examples, so dynamically created prompts often included such duplicates, effectively reducing both the diversity of the examples and the prompt size (Table 9).

Python formatting We conducted an extensive study of the effect of the Python format on downstream task performance in Appendix G. We found that: (i) no single Python class design works uniformly well across tasks; and (ii) larger models are less sensitive to prompt (Python class) design. In general, our approach benefits most from code formats that follow typical coding conventions as closely as possible.
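As an illustration of what "Python class design" can mean, the sketch below encodes the same small script in two different class layouts. Both designs are hypothetical and are not the variants studied in Appendix G: Design A models events as linked objects in the style of ordinary object-oriented code, while Design B stores the graph as flat literals. The code can only show that one graph admits several encodings; it says nothing about which one an LLM completes better, which is the empirical question the study addresses.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Node:
    """One event in the script, with links to the events that follow it."""
    name: str
    children: List["Node"] = field(default_factory=list)


class Tree:
    """Design A (hypothetical): one attribute per event, edges as object links."""
    goal = "bake a cake"

    def __init__(self) -> None:
        self.buy_ingredients = Node("buy ingredients")
        self.mix_batter = Node("mix batter")
        self.bake_in_oven = Node("bake in oven")
        self.buy_ingredients.children = [self.mix_batter]
        self.mix_batter.children = [self.bake_in_oven]

    def edges(self) -> List[Tuple[str, str]]:
        out = []
        for node in (self.buy_ingredients, self.mix_batter, self.bake_in_oven):
            out += [(node.name, child.name) for child in node.children]
        return out


class Script:
    """Design B (hypothetical): the same graph as plain lists of literals."""
    goal = "bake a cake"
    steps = ["buy ingredients", "mix batter", "bake in oven"]
    edge_list = [(0, 1), (1, 2)]  # (parent index, child index)

    def edges(self) -> List[Tuple[str, str]]:
        return [(self.steps[a], self.steps[b]) for a, b in self.edge_list]


print(Tree().edges() == Script().edges())
```

Design A reads more like code a programmer would write, which is the kind of resemblance to typical conventions that the finding above points to.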

Human evaluation We conduct a human evaluation of graphs generated by COCOGEN and Davinci to supplement the automatic metrics. The results (Appendix C) indicate that human judgments are closely correlated with the automatic metrics: for ExplaGraphs, graphs generated by COCOGEN are judged more relevant and correct. For Proscript generation, Davinci and COCOGEN have complementary strengths, but COCOGEN is generally better in terms of relevance.

Authors:

.[email protected]);

(2) Shuyan Zhou, Language Technologies Institute, Carnegie Mellon University, USA ([email protected]);

(3) Uri Alon, Language Technologies Institute, Carnegie Mellon University, USA ([email protected]);

(4) Yiming Yang, Language Technologies Institute, Carnegie Mellon University, USA ([email protected]);

(5) Graham Neubig, Language Technologies Institute, Carnegie Mellon University, USA ([email protected]).